Clusters and Storages

Overview

Clusters Management

Managing Spark infrastructure within a single cluster presents numerous challenges. Our goal is to ensure that the infrastructure has all the essential features, such as data visualization and monitoring of data flows. We also want it to be secure, with built-in replication and versioning capabilities. Additionally, monitoring the health and performance of our jobs is crucial. However, incorporating all these features requires time-consuming configuration, which adds complexity and cost.

Moreover, when transitioning to a multi-cluster infrastructure, these tasks often have to be repeated for each additional cluster, which can drive costs up considerably. Maintenance costs also grow linearly with every cluster you add.

Ilum not only integrates all the features mentioned above into your data infrastructure automatically, but also makes the transition to a multi-cluster architecture as seamless as possible. All you need to manage is networking and access.

With Ilum, you can manage your multi-cluster architecture through a central control plane. Everything can be done within the Ilum application.

Storages Management

There are scenarios where using multiple storage solutions in your data infrastructure becomes necessary. This could be due to cost considerations, unique features offered by different providers, or requirements to have storage in multiple regions to reduce network latency. However, integrating multiple storages into a Spark architecture often involves repetitive and time-consuming tasks, such as configuring each Spark job individually to access the storage.

Ilum simplifies this process for you. All you need to do is configure the storage once by adding the authentication details when attaching it to the cluster. After that, all Ilum jobs are automatically authorized to read from and write to the storage, eliminating the need for manual configuration for each job.

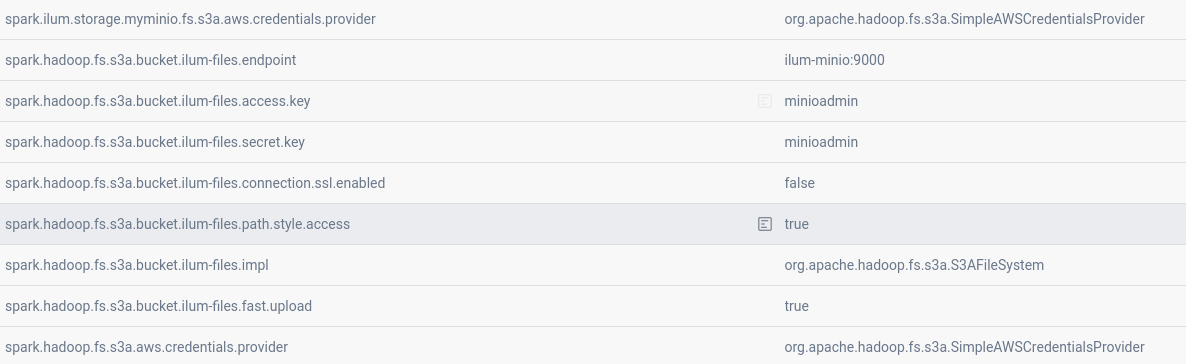

For example, when using the built-in MinIO storage, each Ilum job is preconfigured with these Spark parameters:

Similar parameters are added for each storage in the cluster.
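Ilum's exact parameter list is not shown on this page. As a hedged sketch of the idea, assuming the attached storages are S3-compatible and accessed through the standard Hadoop S3A connector, the snippet below generates per-bucket `fs.s3a.bucket.<bucket>.*` settings so that several storages with different endpoints and credentials can coexist in one job. The `S3Storage` class, the helper names, and all bucket names, endpoints, and credentials are hypothetical placeholders, not Ilum's configuration.

```python
# Hypothetical sketch: per-bucket Hadoop S3A settings for several attached
# S3-compatible storages. Not the exact parameters Ilum injects.
from dataclasses import dataclass


@dataclass(frozen=True)
class S3Storage:
    bucket: str
    endpoint: str
    access_key: str
    secret_key: str
    path_style: bool = True  # MinIO deployments are commonly addressed path-style


def s3a_conf(storages: list[S3Storage]) -> dict[str, str]:
    """Build spark.hadoop.fs.s3a.bucket.<bucket>.* entries for every storage."""
    conf: dict[str, str] = {}
    for s in storages:
        prefix = f"spark.hadoop.fs.s3a.bucket.{s.bucket}."
        conf[prefix + "endpoint"] = s.endpoint
        conf[prefix + "access.key"] = s.access_key
        conf[prefix + "secret.key"] = s.secret_key
        conf[prefix + "path.style.access"] = str(s.path_style).lower()
    return conf


def to_submit_args(conf: dict[str, str]) -> list[str]:
    """Render settings as spark-submit "--conf key=value" argument pairs."""
    args: list[str] = []
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    storages = [
        S3Storage("ilum-data", "http://minio:9000",
                  "<minio-access-key>", "<minio-secret-key>"),
        S3Storage("eu-archive", "https://s3.eu-central-1.amazonaws.com",
                  "<aws-access-key>", "<aws-secret-key>", path_style=False),
    ]
    print(" ".join(to_submit_args(s3a_conf(storages))))
```

Scoping credentials per bucket, rather than setting the global `fs.s3a.*` keys, is what lets two storages with different endpoints be used within the same job.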

Ilum supports four types of storage: GCS, S3, WASBS (Azure Blob Storage), and HDFS.
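Each storage type is addressed in Spark through its own URI scheme. The helper below is a minimal sketch, assuming the common connectors: `gs://` for the GCS connector, `s3a://` for Hadoop S3A, `wasbs://` for Azure Blob Storage over TLS, and `hdfs://` for HDFS. The function name and all bucket, account, and host names are hypothetical.

```python
# Hypothetical sketch: build Spark-readable URIs for the four storage types.
def storage_uri(kind: str, location: str, path: str) -> str:
    """Return a URI for `path` on a storage of the given kind.

    `location` is a bucket name (gcs, s3), "container@account" (wasbs),
    or "namenode-host:port" (hdfs).
    """
    path = path.lstrip("/")
    if kind == "gcs":
        return f"gs://{location}/{path}"
    if kind == "s3":
        return f"s3a://{location}/{path}"  # Hadoop S3A connector scheme
    if kind == "wasbs":
        container, account = location.split("@", 1)
        return f"wasbs://{container}@{account}.blob.core.windows.net/{path}"
    if kind == "hdfs":
        return f"hdfs://{location}/{path}"
    raise ValueError(f"unsupported storage kind: {kind!r}")


if __name__ == "__main__":
    print(storage_uri("s3", "ilum-data", "/events/2024/"))
    print(storage_uri("wasbs", "logs@myaccount", "spark/app-1.parquet"))
```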

To learn how to add storages to clusters, see the Storages Addition Guide.

Centralized Jobs Management

Problem

Let's look at an example. We have 10 clusters in different regions and want to deploy a job on one of them. How would this look without Ilum?

First, we would need to update the kubectl configuration to make the chosen cluster's context the current one:

```bash
kubectl config use-context cluster-i-context
```

This means maintaining a large kubeconfig file in which every cluster is defined as a separate context:

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /path/to/ca-1.crt
    server: https://<cluster-1-ip>:6443
  name: cluster-1
# ...
- cluster:
    certificate-authority: /path/to/ca-n.crt
    server: https://<cluster-n-ip>:6443
  name: cluster-n
contexts:
- context:
    cluster: cluster-1
    namespace: default
    user: user-1
  name: cluster-1-context
# ...
- context:
    cluster: cluster-n
    namespace: default
    user: user-n
  name: cluster-n-context
current-context: cluster-i-context
kind: Config
preferences: {}
users:
- name: user-1
  user:
    client-certificate: /path/to/client/certificate-1.crt
    client-key: /path/to/client/key-1.key
# ...
- name: user-n
  user:
    client-certificate: /path/to/client/certificate-n.crt
    client-key: /path/to/client/key-n.key
```

Such an approach introduces several challenges. Consider the following scenarios:

- Lost Kubeconfig: What happens if you lose your kubeconfig file? It would disrupt your access to the cluster, requiring manual recovery or regeneration.

- Certificate Dependency: What if the kubeconfig relies on a certificate stored locally on your PC, and you lose it? Restoring access would become cumbersome.

- Multi-User Access: If you want to grant cluster access to multiple people, would you need to distribute the kubeconfig file and certificates to everyone? This process is not only inefficient but also poses security risks.

- Certificate Updates: What happens when the certificates expire or need updating? You'd have to update every user's kubeconfig file and certificates, adding further complexity.

Even if you resolve all these issues, you still face the task of creating a Spark job. For instance, you could submit a job using the following command:

```bash
spark-submit \
  --master k8s://https://<kubernetes-api-server>:6443 \
  --deploy-mode cluster \
  --name spark-app \
  --class com.example.MyApp \
  --conf spark.executor.instances=5 \
  --conf spark.kubernetes.container.image=<spark-image> \
  local:///path/to/application.jar
```

Here the `local://` scheme means the application JAR is already present inside the container image.

You would also need to rerun this command every time you rescale the job or change its configuration, which adds a lot of unnecessary manual effort.

Solution

Ilum eliminates all these problems with its streamlined approach:

- Single-Time Setup: Simply add the cluster’s certificates once to connect Ilum to your cluster, and you're done. No need to manage lengthy kubeconfig files or track multiple certificates and keys.

- No Engineer-Side Configuration: Your engineers won’t have to configure cluster connections manually or deal with kubeconfig files.

- UI-Driven Management: Forget about using kubectl for deploying Spark jobs. With Ilum, everything is handled through an intuitive UI.

To get started, add a cluster by following the appropriate guide.

If you need to update certificates or any other cluster configurations, simply click the Edit button for the desired cluster and follow the same guides.

Note: Jobs launched with Ilum require access to the centralized control plane. To ensure this, you’ll need to expose Ilum services to the outside world and create external services in your remote cluster.
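One common way to create such an external service is a Kubernetes `Service` of type `ExternalName`, which gives pods in the remote cluster a local DNS name that resolves (as a CNAME) to the publicly exposed Ilum endpoint. The sketch below only builds the manifest and is an assumption about how you might do this, not Ilum's documented procedure; the service name `ilum-core`, the namespace, and the hostname `ilum.example.com` are hypothetical.

```python
# Hypothetical sketch: build an ExternalName Service manifest for a remote
# cluster. Names and hostname are placeholders, not Ilum defaults.
import ipaddress
import json


def external_name_service(name: str, namespace: str, external_host: str) -> dict:
    """Return a Service that resolves <name>.<namespace>.svc to external_host."""
    try:
        ipaddress.ip_address(external_host)
    except ValueError:
        pass  # not an IP literal: ExternalName expects a DNS name
    else:
        raise ValueError("externalName must be a DNS name, not an IP address")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"type": "ExternalName", "externalName": external_host},
    }


if __name__ == "__main__":
    manifest = external_name_service("ilum-core", "ilum", "ilum.example.com")
    # kubectl also accepts JSON manifests, e.g.: kubectl apply -f ilum-core.json
    print(json.dumps(manifest, indent=2))
```

An `ExternalName` service only changes DNS resolution; the exposed endpoint must still be reachable from the remote cluster's network on the ports the jobs use.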

Once your cluster is connected, navigate to the Cluster List and select the cluster where you want to deploy your Spark job. From there, you can deploy Ilum jobs by following these guides:

- How to run a simple Spark Job

- How to run an interactive Spark Job

- How to run an interactive Code Group

With Ilum’s Interactive Jobs feature, you can configure Spark parameters, upload files, deploy a Spark pod once, and run your Spark applications multiple times without redeploying. Furthermore, the Interactive Code Groups feature allows you to execute Spark code directly within the Ilum UI.

At any time, you can:

- Edit your job’s Spark parameters or assigned resources.

- Rescale the job as needed.

- Restart or delete jobs directly from the UI without ever using the console.

Ilum simplifies cluster and Spark job management, saving time and reducing operational complexity.

Centralized Monitoring

In Ilum, you can monitor all vital information about your data infrastructure from the central control plane.

History Server

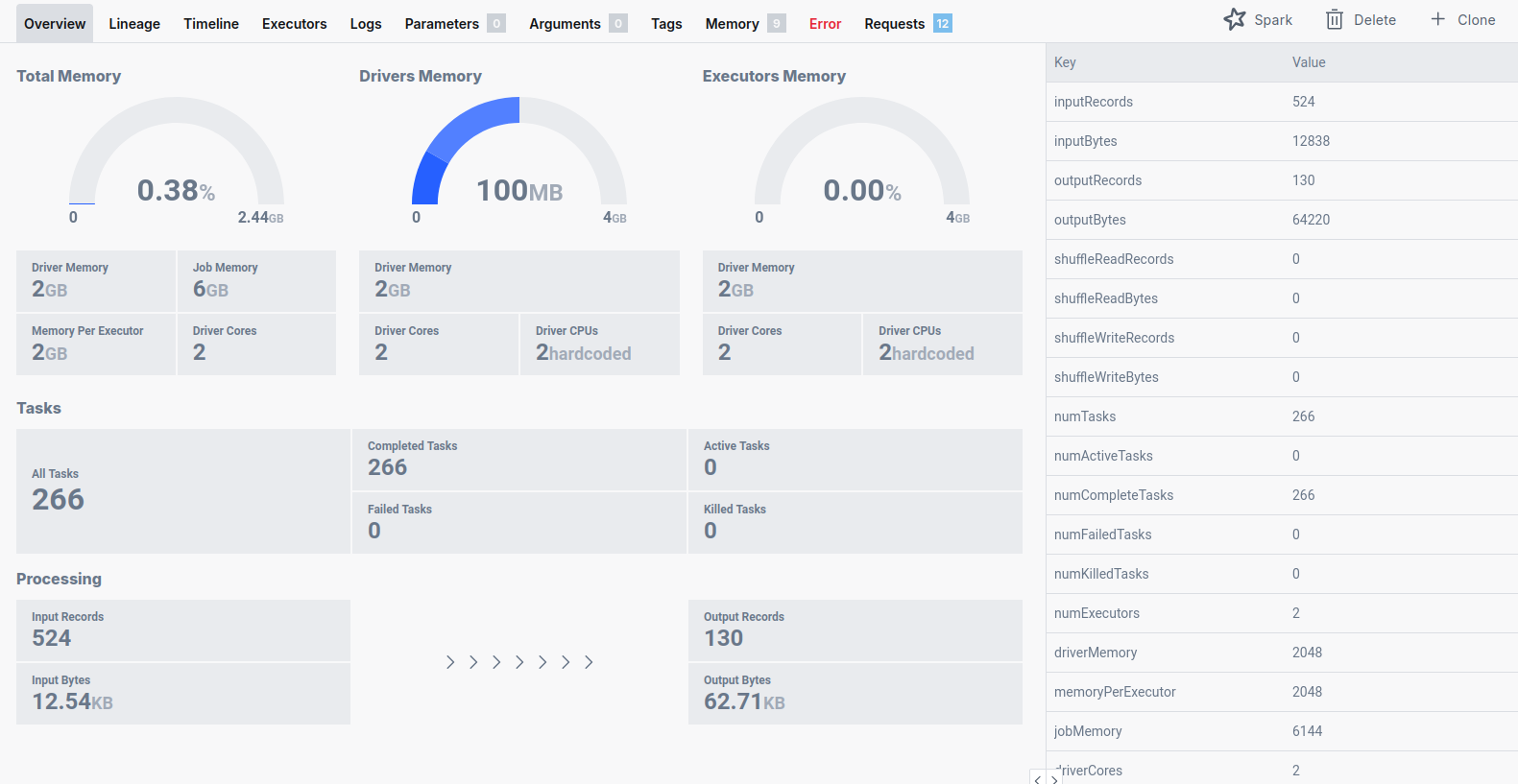

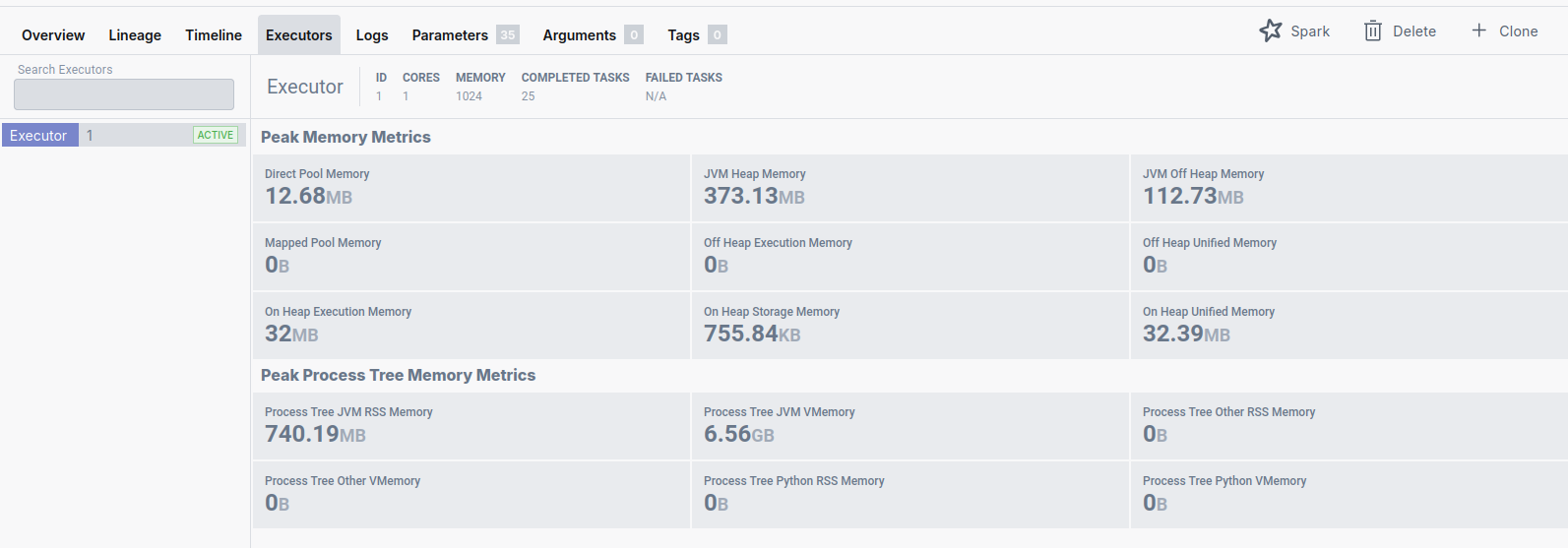

When Spark runs your application, it creates an execution plan, breaking it down into separate stages, jobs, and tasks. Along the way, it tracks key metrics such as the number of rows and bytes of data transferred between stages, as well as other vital performance details.

In Ilum, all jobs send this information to the Event Log, which is then organized by the History Server. This allows you to conveniently analyze the data directly within the Ilum UI.

The Event Log is stored in the default Ilum storage. To collect data from Spark jobs running on remote clusters, you’ll need to expose this storage. Detailed instructions can be found in the GKE Addition Guide.
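The essential requirement is that every job, local or remote, writes its event log to a location the central History Server can read. A minimal sketch, assuming the standard Spark properties `spark.eventLog.enabled` and `spark.eventLog.dir` on the job side and `spark.history.fs.logDirectory` on the History Server side; the helper name and the `s3a://ilum-events/spark-logs` path are hypothetical, and Ilum preconfigures the real values for its jobs.

```python
# Hypothetical sketch: matching event-log settings for jobs and for the
# central History Server. The path is a placeholder.
from urllib.parse import urlparse


def event_log_settings(log_dir: str) -> tuple[dict[str, str], dict[str, str]]:
    """Return (job_conf, history_server_conf) sharing one event-log directory."""
    scheme = urlparse(log_dir).scheme
    if scheme in ("", "file"):
        # A path local to one cluster cannot be read by a History Server elsewhere.
        raise ValueError("event logs must go to shared storage, not a local path")
    job_conf = {
        "spark.eventLog.enabled": "true",
        "spark.eventLog.dir": log_dir,
    }
    history_server_conf = {"spark.history.fs.logDirectory": log_dir}
    return job_conf, history_server_conf


if __name__ == "__main__":
    job, history = event_log_settings("s3a://ilum-events/spark-logs")
    print(job)
    print(history)
```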

For more information about the History Server and how to monitor your jobs, see the Monitoring page.

Graphite

Graphite is a metrics collection tool similar to Prometheus, but it operates on a push-based model: applications send their metrics to Graphite instead of waiting to be scraped. This makes it particularly well suited to multi-cluster environments.
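To make the push model concrete: with Graphite's plaintext protocol, a client opens a TCP connection to Carbon (port 2003 by default) and writes lines of the form `<metric.path> <value> <timestamp>`. Each cluster therefore needs only outbound access to one central endpoint, rather than a central server needing inbound access to every cluster. The sketch below is illustrative only; the helper names, the host `graphite.example.com`, and the metric path are hypothetical, and Spark jobs normally report through Spark's built-in Graphite sink rather than hand-written code.

```python
# Hypothetical sketch of pushing metrics with Graphite's plaintext protocol.
import socket
import time
from typing import Optional


def plaintext_line(path: str, value: float, timestamp: Optional[int] = None) -> str:
    """Format one metric line: '<path> <value> <unix-timestamp>' plus a newline."""
    if not path or any(c.isspace() for c in path):
        raise ValueError("metric path must be non-empty and contain no whitespace")
    ts = int(time.time()) if timestamp is None else timestamp
    return f"{path} {value} {ts}\n"


def push(lines: list[str], host: str, port: int = 2003, timeout: float = 5.0) -> None:
    """Open a TCP connection to Carbon and send the lines; nothing is scraped."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall("".join(lines).encode("utf-8"))


if __name__ == "__main__":
    line = plaintext_line("cluster-eu.app-1.driver.jvm.heap.used", 512.0)
    print(line, end="")
    # push([line], "graphite.example.com")  # requires a reachable Carbon server
```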

All Ilum jobs are preconfigured to push their metrics data to Graphite, enabling centralized monitoring of your applications and infrastructure.
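For reference, Spark exposes its Graphite integration through its metrics system, configurable with `spark.metrics.conf.*` properties and the built-in `org.apache.spark.metrics.sink.GraphiteSink`. The helper below sketches what such a configuration can look like; it is not Ilum's actual preconfiguration, and the host name and the per-cluster `prefix` (used here to keep metrics from different clusters apart in one Graphite instance) are assumptions.

```python
# Hypothetical sketch: Spark properties enabling the built-in Graphite sink.
def graphite_sink_conf(host: str, port: int = 2003, prefix: str = "",
                       period_seconds: int = 10) -> dict[str, str]:
    """Return spark.metrics.conf.* entries applied to every metrics instance (*)."""
    base = "spark.metrics.conf.*.sink.graphite."
    conf = {
        base + "class": "org.apache.spark.metrics.sink.GraphiteSink",
        base + "host": host,
        base + "port": str(port),
        base + "period": str(period_seconds),
        base + "unit": "seconds",
    }
    if prefix:
        # A per-cluster prefix keeps metric paths from different clusters distinct.
        conf[base + "prefix"] = prefix
    return conf


if __name__ == "__main__":
    for key, value in graphite_sink_conf("graphite.example.com", prefix="cluster-eu").items():
        print(f"--conf {key}={value}")
```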

To enable Graphite, follow the instructions on the Monitoring page. Once it is enabled, configure your clusters to integrate with Graphite as described in the GKE Addition Guide.

Data Lineage

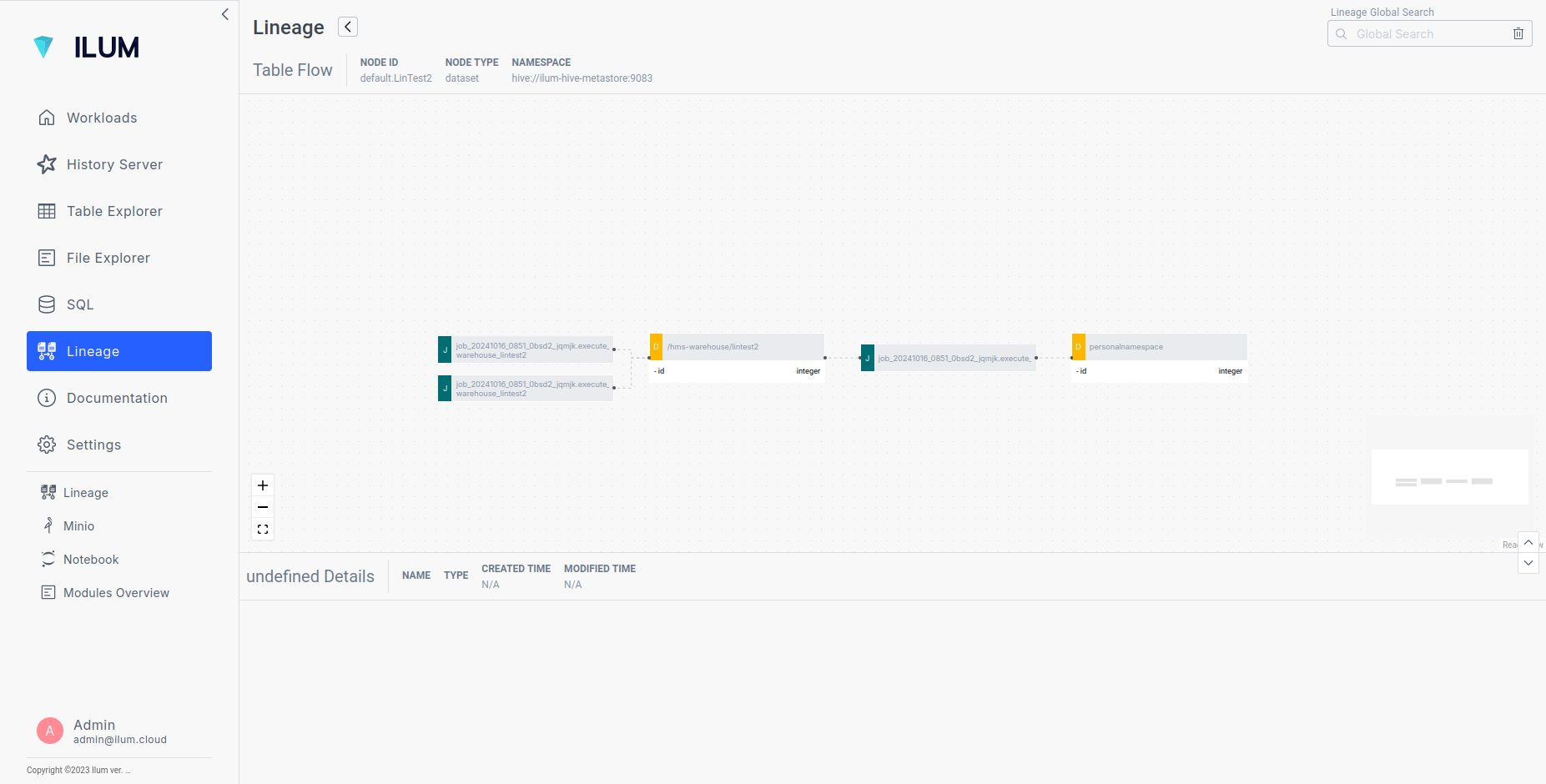

Lineage is an Ilum feature that lets you visualize the relationships between datasets and jobs within your projects.

For instance, you can view a dataflow visualization showing how two jobs ingest data into storage, after which another job processes this data into the final dataset. This provides a clear and intuitive understanding of how your data moves and transforms across the pipeline.

Ilum creates such visualizations automatically using a metadata database: each Ilum job is preconfigured to send metadata about itself to the database, and the Ilum UI uses this data to present the relationships between jobs and datasets.
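The idea can be illustrated with a toy model: if every job reports which datasets it read and wrote, the lineage graph follows directly from those reports. The sketch below is not Ilum's implementation or metadata format; the function names and the `job:`/`ds:` naming convention are hypothetical. It rebuilds the example above, where two ingestion jobs feed a third job that produces the final dataset.

```python
# Toy lineage model (hypothetical): jobs report their input and output datasets.
from collections import defaultdict


def build_lineage(jobs: dict[str, tuple[set[str], set[str]]]) -> dict[str, set[str]]:
    """Map each node (job or dataset) to the nodes directly downstream of it."""
    graph: dict[str, set[str]] = defaultdict(set)
    for job, (inputs, outputs) in jobs.items():
        for dataset in inputs:
            graph[dataset].add(job)   # dataset -> job that reads it
        for dataset in outputs:
            graph[job].add(dataset)   # job -> dataset it writes
    return dict(graph)


def upstream(graph: dict[str, set[str]], node: str) -> set[str]:
    """Return every job and dataset that `node` transitively depends on."""
    parents: dict[str, set[str]] = defaultdict(set)
    for src, dsts in graph.items():
        for dst in dsts:
            parents[dst].add(src)
    seen: set[str] = set()
    stack = [node]
    while stack:
        for parent in parents[stack.pop()]:
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


if __name__ == "__main__":
    jobs = {
        "job:ingest_orders": (set(), {"ds:raw/orders"}),
        "job:ingest_customers": (set(), {"ds:raw/customers"}),
        "job:build_report": ({"ds:raw/orders", "ds:raw/customers"}, {"ds:final/report"}),
    }
    graph = build_lineage(jobs)
    print(sorted(upstream(graph, "ds:final/report")))
```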

By exposing this metadata database to remote clusters, you can observe the data flow across your whole multi-cluster architecture. To learn how to expose it, see the GKE Addition Guide.

To learn more about Data Lineage, see the Data Lineage page.

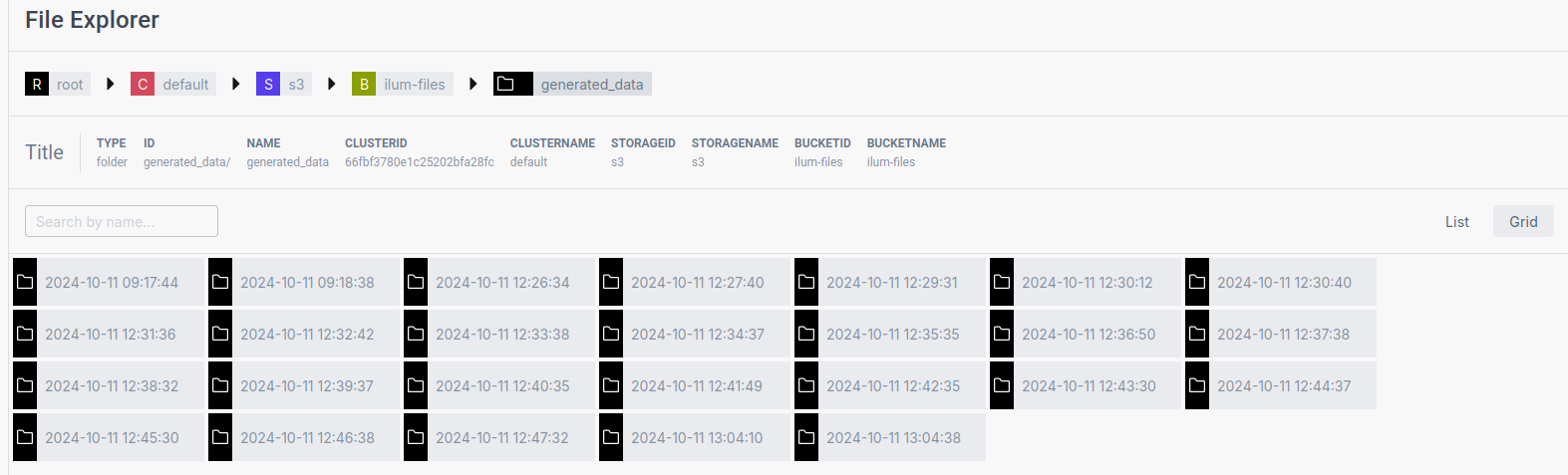

File Explorer

The File Explorer is an Ilum feature that enables you to view metadata for objects stored across all storage systems on all your clusters. It significantly simplifies storage monitoring by eliminating the need to switch between different IAM configurations, service accounts, or tools to access the contents of your storages. Additionally, it removes the hassle of sharing storage access with your team members, as everyone can conveniently view storage content directly through the Ilum UI.

To learn more, see the File Explorer page.


Centralized Data Analytics

Ilum integrates the tools needed to perform data analytics directly through the Ilum UI.

Hive Metastore

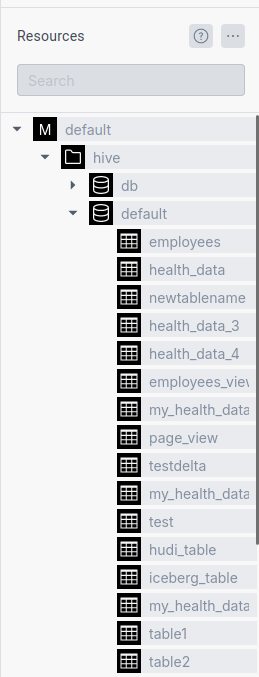

Hive Metastore is a service that persistently stores the metadata of your Spark catalogs (tables, views, etc.), so that it outlives individual Spark sessions. To learn more about it, see the Table Explorer page.

In a multi-cluster architecture, the metastore lets you organize your data into tables so that it can be used by the Table Explorer.

To make this work, you need to expose the Hive Metastore server to the remote clusters by following the instructions in the GKE Addition Guide.
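On the Spark side, connecting a job in a remote cluster to the exposed metastore typically comes down to two standard properties: enabling the Hive catalog and pointing the Hive client at the metastore's Thrift URI (port 9083 by default). The sketch below assumes a hypothetical host name and helper; the table data itself must also live on storage that the remote cluster can reach.

```python
# Hypothetical sketch: Spark properties for using a remote Hive Metastore.
def hive_metastore_conf(host: str, port: int = 9083) -> dict[str, str]:
    """Return settings that make Spark use the Hive Metastore at host:port."""
    return {
        # Use the Hive-backed catalog instead of Spark's in-memory one.
        "spark.sql.catalogImplementation": "hive",
        # Passed to the Hadoop/Hive configuration through the spark.hadoop. prefix.
        "spark.hadoop.hive.metastore.uris": f"thrift://{host}:{port}",
    }


if __name__ == "__main__":
    for key, value in hive_metastore_conf("hive-metastore.example.com").items():
        print(f"--conf {key}={value}")
```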

Table Explorer

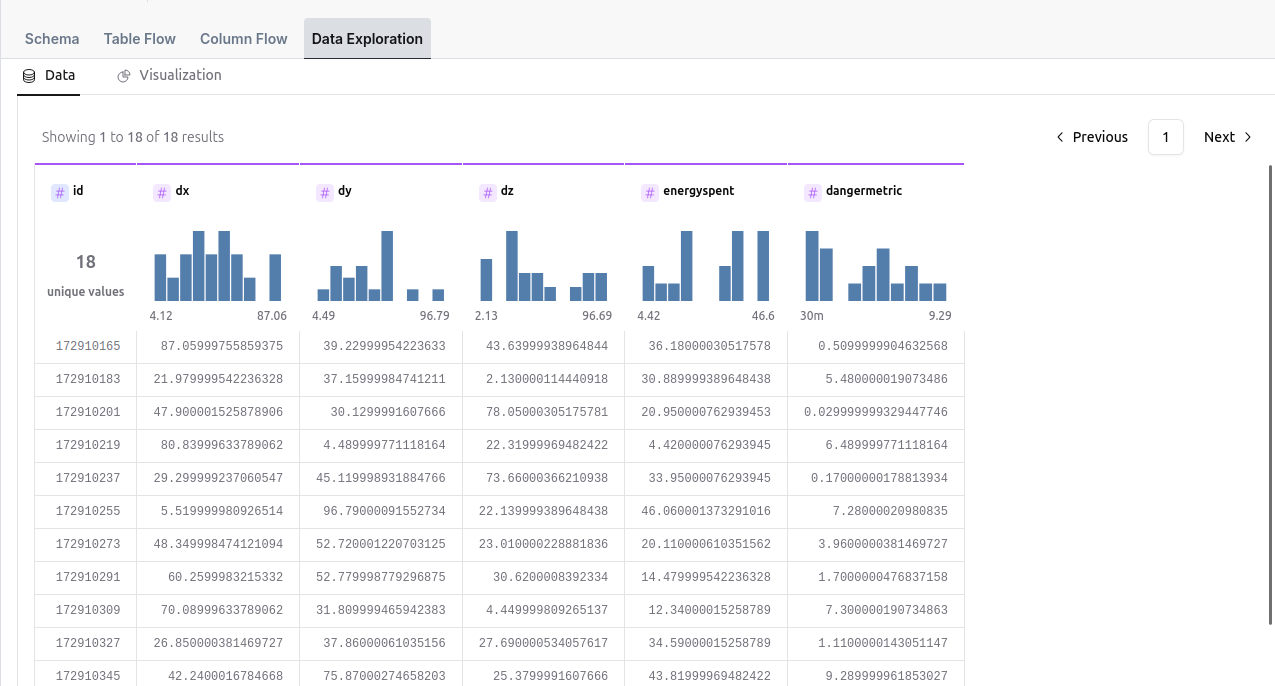

In the Table Explorer, you can browse the tables stored in the Hive Metastore.

Moreover, you can create charts using different formulas on a small portion of a table's data with the Ilum Data Exploration Tool.

To learn more about it, see the Table Explorer page.

Ilum SQL

Similar to the Data Exploration Tool, you can access small portions of data by applying SQL operations. However, Ilum SQL provides greater flexibility by enabling you to run complex SQL queries on tables from the Hive Metastore, allowing for more advanced data exploration and analysis.

To learn more, see the Ilum SQL page.

Multiple Metastores

Currently, Ilum supports only a single Hive Metastore, which can increase network latency: Ilum jobs that rely on Hive catalogs must fetch metadata from a remote cluster, and the added network overhead can slow down job execution.

We are actively working on this limitation to improve performance and provide a more seamless experience for jobs that need Hive metadata across multiple clusters.