Schedule

Overview

The Ilum Schedule feature lets you set up and manage schedules for individual jobs through an intuitive, user-friendly interface, making it simple to configure when and how often each job runs.

Under the hood, this feature uses Kubernetes CronJobs, which rely on CRON expressions to define precisely when jobs should run. At each scheduled time, the CronJob sends a request to Ilum's backend, which then creates a single job.
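To make the mechanism more concrete, the minimal sketch below builds the kind of Kubernetes CronJob object described above as a Python dictionary and prints it as JSON. The `batch/v1` CronJob fields (`schedule`, `jobTemplate`, `restartPolicy`, `containers`) are standard Kubernetes; the schedule name, the `curlimages/curl` container, and the trigger URL are hypothetical placeholders and do not describe Ilum's actual manifests or backend API.

```python
import json

# Hypothetical values for illustration only; Ilum generates its own CronJob
# objects, and their real names, images and endpoints may differ.
SCHEDULE_NAME = "my-schedule"
CRON_EXPRESSION = "0 * * * *"  # every hour, at minute 0
TRIGGER_URL = "http://ilum-core.example/hypothetical/schedules/my-schedule/trigger"


def build_cronjob(name, schedule, url):
    """Return a batch/v1 CronJob manifest whose pod sends a single HTTP POST."""
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": name},
        "spec": {
            "schedule": schedule,
            "jobTemplate": {
                "spec": {
                    "template": {
                        "spec": {
                            # Pods created by a Job must use Never or OnFailure.
                            "restartPolicy": "Never",
                            "containers": [
                                {
                                    "name": "trigger",
                                    "image": "curlimages/curl",
                                    # The container only makes a request; the
                                    # backend is what creates the Spark job.
                                    "command": ["curl", "-s", "-X", "POST", url],
                                }
                            ],
                        }
                    }
                }
            },
        },
    }


if __name__ == "__main__":
    manifest = build_cronjob(SCHEDULE_NAME, CRON_EXPRESSION, TRIGGER_URL)
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(manifest, indent=2))
```

The design point the sketch illustrates is the split of responsibilities: Kubernetes only handles the timing, while all job-specific logic stays in the backend that receives the request.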

Use cases

Data Ingestion

Periodically fetch data from external APIs or other sources and load it into a database or file system for further processing or analysis.

Data Pipeline Orchestration (ETL)

Automate Spark-based ETL (Extract, Transform, Load) jobs that extract raw data from multiple sources, apply complex transformations, and load the results into a data warehouse.

Report Preparation and Data Aggregation for Analytics

Schedule a Spark job to aggregate large datasets from various sources (e.g., logs, sales data, user interactions) into summary tables that are used in dashboards and reports.

Data Cleanup

Schedule a Spark job that performs data cleanup, such as removing duplicates, correcting invalid entries, or filtering data, in a large dataset stored in a distributed system.

Get started

Scheduled Python Job

The example below is a simple Spark job in Python that generates random data and writes it to an S3 bucket. This job will be scheduled to run at a specified time interval.


Write a single job

```python
import random
from datetime import datetime

from pyspark.sql import Row, SparkSession


def generate_sample_data(n):
    # Build n rows with an id, a name and a random value between 70 and 99.
    return [Row(id=i, name=f"Name_{i}", value=random.randrange(70, 100)) for i in range(n)]


if __name__ == "__main__":
    spark = SparkSession.builder \
        .appName("My Spark Job") \
        .getOrCreate()

    data = generate_sample_data(100)
    df = spark.createDataFrame(data)

    # Write each run to its own timestamped directory in the bucket.
    current_datetime = datetime.now()
    current_timestamp = current_datetime.strftime("%Y-%m-%d %H:%M:%S")
    output_path = f"s3a://ilum-files/generated_data/{current_timestamp}"

    df.write.mode("overwrite").parquet(output_path)

    spark.stop()
```

Save the file as `<your filename>.py`.

Create a schedule

When creating your schedule, specify the following:

- General Tab:
  - Name: Name of the schedule
  - Cluster: The cluster on which the job will run
  - Language: Python in this case
  - Class: `<your filename>` without the `.py` extension

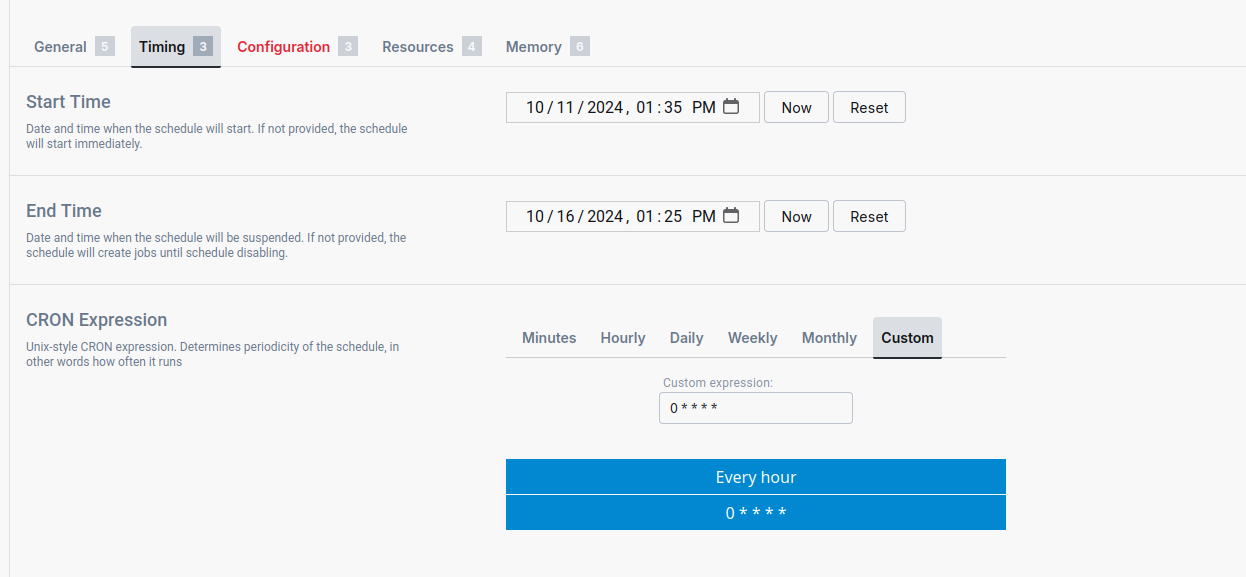

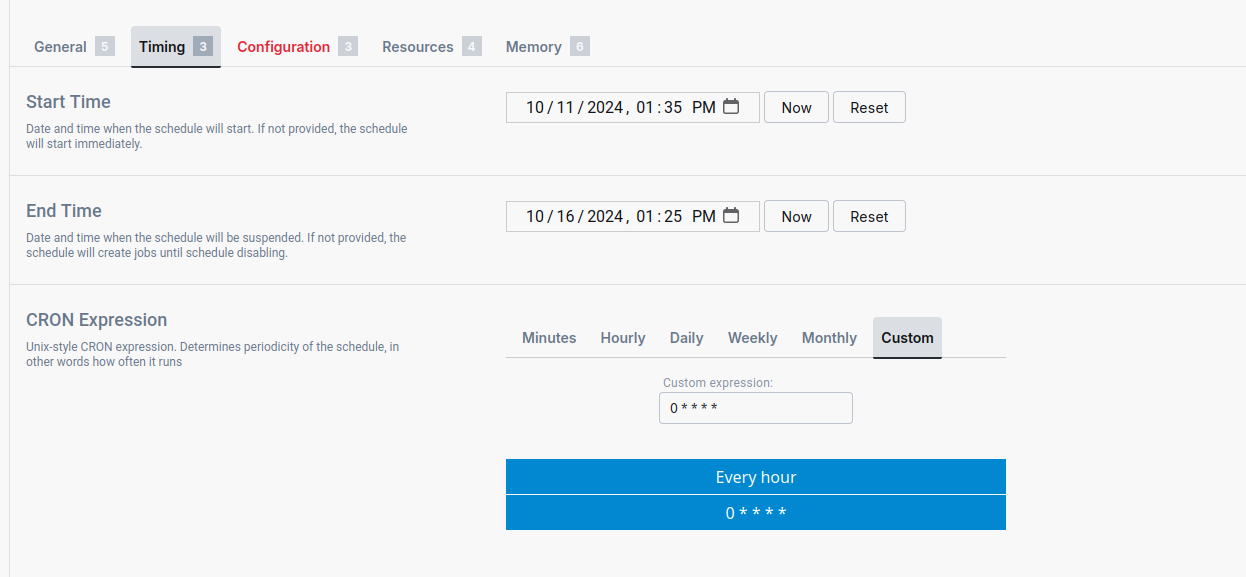

- Timing Tab:
  - Pick a value that suits your needs. A custom CRON expression can be used if the predefined options don’t meet your requirements.


- Resources Tab:
  - PyFiles: Upload the Python script


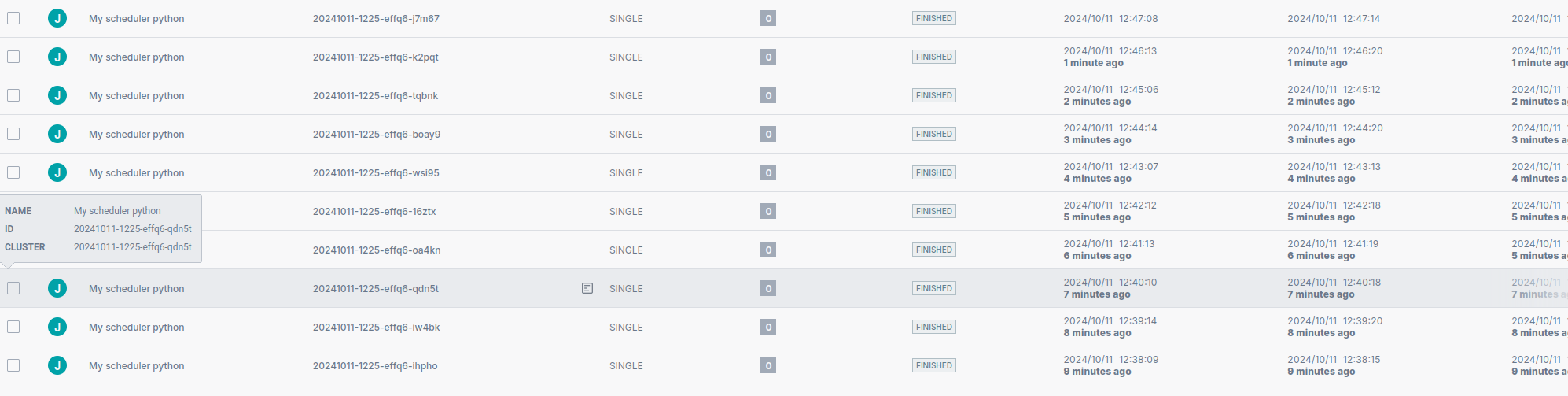

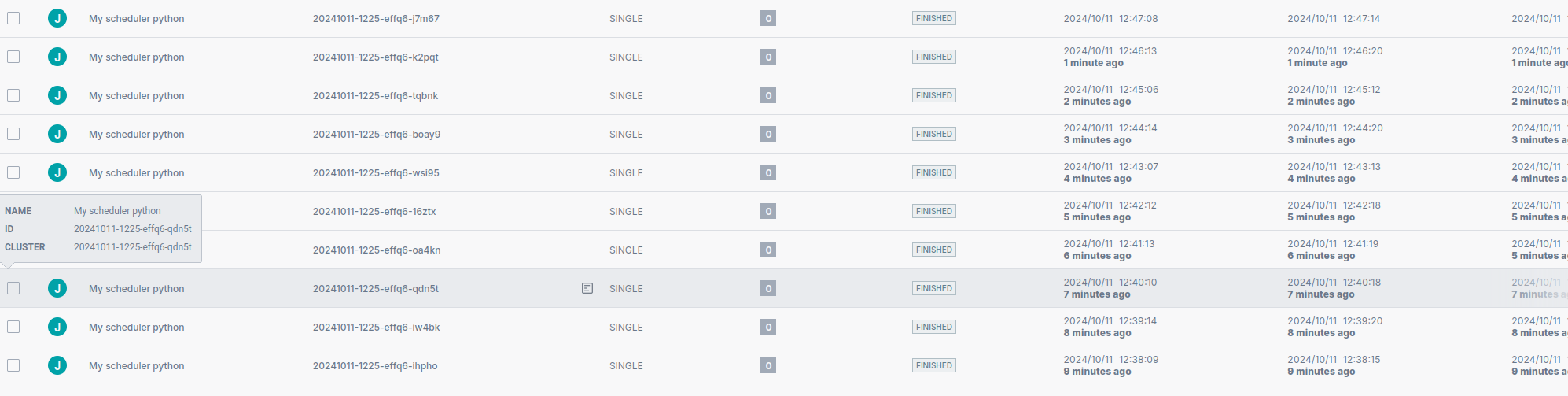

Observe logs of launched jobs

Once created, the schedule is active immediately and launches jobs according to the settings you specified.

Jobs launched by the schedule behave in the same way as regular Ilum single jobs. For example, you can open the Jobs tab to see the list of jobs the schedule has launched and inspect their logs.

Scheduled Scala Job

This example is the same as the Python one, but written in Scala.


Create an sbt project

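When creating the project, make sure to include the `spark-sql` dependency in the `build.sbt` file:

```scala
libraryDependencies += "org.apache.spark" %% "spark-sql" % "3.5.3" % "provided"
```

Keep in mind that the dependency version should match the version of Spark running on the cluster. Because `%%` appends the project's Scala binary version to the artifact name, the project's `scalaVersion` should likewise match the Scala version the cluster's Spark was built with.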


Write & compile a single job

```scala
import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}
import org.apache.spark.sql.{Row, SparkSession}

import java.time.LocalDateTime
import java.time.format.DateTimeFormatter
import scala.util.Random

object Main {

  // Build n rows with an id, a name and a random value between 70 and 99.
  private def generateSampleData(n: Int): Seq[Row] = {
    (0 until n).map(i => Row(i, s"Name_$i", Random.nextInt(30) + 70))
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("My Spark Job")
      .getOrCreate()

    val data = generateSampleData(100)

    // Generic Rows carry no type information, so the schema is declared explicitly.
    val df = spark.createDataFrame(
      spark.sparkContext.parallelize(data),
      StructType(
        List(
          StructField("id", IntegerType, nullable = false),
          StructField("name", StringType, nullable = false),
          StructField("value", IntegerType, nullable = false)
        )
      )
    )

    // Write each run to its own timestamped directory in the bucket.
    val currentDateTime = LocalDateTime.now()
    val currentTimestamp = currentDateTime.format(DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss"))
    val outputPath = s"s3a://ilum-files/generated_data/$currentTimestamp"

    df.write.mode("overwrite").parquet(outputPath)

    spark.stop()
  }
}
```

Now the project can be compiled into a JAR file. While you would normally build a "fat JAR" with all dependencies included, this job is simple enough to run with the dependencies provided by the cluster, which is why `spark-sql` is marked as `provided`.

To quickly package the project, run the `sbt package` command. By default, sbt writes the JAR to the `target/scala-<scala version>/` directory.

Create a schedule

When creating your schedule, specify the following:

- General Tab:
  - Name: Name of the schedule
  - Cluster: The cluster on which the job will run
  - Language: Scala in this case
  - Class: The fully qualified class name. Because `Main` is declared without a package, sbt places its class file in the root of the JAR, so `Main` is enough.

- Timing Tab:
  - Pick a value that suits your needs. A custom CRON expression can be used if the predefined options don’t meet your requirements.


- Resources Tab:
  - Jars: Upload the JAR file


Observe logs of launched jobs

Once created, the schedule is active immediately and launches jobs according to the settings you specified.

Jobs launched by the schedule behave in the same way as regular Ilum single jobs. For example, you can open the Jobs tab to see the list of jobs the schedule has launched and inspect their logs.

Loading example schedule

Ilum provides an example schedule to help new users get started quickly.

Loading the example schedule is enabled by default. To disable it, pass `--set ilum-core.examples.schedule=false` to Helm when installing or upgrading Ilum.

Tips

Leverage the full power of CRON expressions

While Ilum comes with predefined options for scheduling, you can also use custom CRON expressions to set up more complex schedules.

A CRON expression consists of five fields:

`<minute> <hour> <day-of-month> <month> <day-of-week>`

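Each field accepts the following special characters:

- `*`: every value of the field
- `?`: any value; Kubernetes treats it the same as `*` (for example, `?` in the day-of-week field means the day of the week is ignored)
- `-`: range, e.g. `1-5`
- `,`: list of values, e.g. `1,5`
- `/`: increments, e.g. `5/15` in the minute field matches minutes 5, 20, 35 and 50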
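The minimal Python sketch below shows how these special characters expand a single field into the set of values it matches. It illustrates standard cron semantics and is not the parser Kubernetes uses; the function name `expand_field` and the bounds table are assumptions, and named values such as `MON` or `JAN` are not supported.

```python
# Minimal sketch: expand one cron field into the sorted list of values it matches.
# Supports *, ?, ranges (a-b), lists (a,b) and steps (a/n, a-b/n, */n).

FIELD_BOUNDS = {
    "minute": (0, 59),
    "hour": (0, 23),
    "day-of-month": (1, 31),
    "month": (1, 12),
    "day-of-week": (0, 6),  # 0 = Sunday
}


def expand_field(expr, field):
    low, high = FIELD_BOUNDS[field]
    values = set()
    for part in expr.split(","):  # ',' separates independent items
        range_part, _, step_part = part.partition("/")
        step = int(step_part) if step_part else 1
        if range_part in ("*", "?"):  # every / any value
            start, end = low, high
        elif "-" in range_part:  # explicit range a-b
            a, b = range_part.split("-")
            start, end = int(a), int(b)
        else:
            start = int(range_part)
            # A lone start value with a step ("5/15") runs up to the field maximum.
            end = high if step_part else start
        if not (low <= start <= end <= high):
            raise ValueError(f"{part!r} is out of range for {field}")
        values.update(range(start, end + 1, step))
    return sorted(values)


if __name__ == "__main__":
    print(expand_field("5/15", "minute"))      # [5, 20, 35, 50]
    print(expand_field("1-5", "day-of-week"))  # [1, 2, 3, 4, 5]
    print(expand_field("1,5", "hour"))         # [1, 5]
    print(expand_field("*/6", "hour"))         # [0, 6, 12, 18]
```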

Examples:

Every hour:

`0 * * * *`

Every Sunday at 3 AM:

`0 3 * * 0`

Every 15 minutes, every day:

`0/15 * * * ?`

Every five minutes from 1:00 p.m. to 1:55 p.m. and from 6:00 p.m. to 6:55 p.m., every day:

`0/5 13,18 * * ?`
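To check what a custom expression actually does before using it, it helps to list its next fire times. The minimal, self-contained sketch below does this by brute force, testing every minute against the five fields. It handles only numeric values and follows classic cron semantics, including the rule that when both day-of-month and day-of-week are restricted, matching either one is enough. It is an illustration rather than the Kubernetes CronJob controller, and the names `parse_field` and `next_runs` are hypothetical.

```python
from datetime import datetime, timedelta

# Field bounds in cron order: minute, hour, day-of-month, month, day-of-week (0 = Sunday).
BOUNDS = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 6)]


def parse_field(expr, low, high):
    """Return the set of values a field matches and whether it is unrestricted."""
    values = set()
    for part in expr.split(","):
        range_part, _, step_part = part.partition("/")
        step = int(step_part) if step_part else 1
        if range_part in ("*", "?"):
            start, end = low, high
        elif "-" in range_part:
            start, end = map(int, range_part.split("-"))
        else:
            start = int(range_part)
            end = high if step_part else start  # "5/15" means 5 up to the maximum
        values.update(range(start, end + 1, step))
    return values, expr in ("*", "?")


def next_runs(expression, after, count=5):
    """List up to `count` fire times after `after`, searching at most one year ahead."""
    parts = expression.split()
    if len(parts) != 5:
        raise ValueError("expected 5 fields")
    fields = [parse_field(p, lo, hi) for p, (lo, hi) in zip(parts, BOUNDS)]
    (minutes, _), (hours, _), (doms, dom_any), (months, _), (dows, dow_any) = fields

    t = after.replace(second=0, microsecond=0) + timedelta(minutes=1)
    limit = t + timedelta(days=366)  # impossible dates such as Feb 31 never match
    runs = []
    while len(runs) < count and t < limit:
        cron_dow = (t.weekday() + 1) % 7  # Python: Monday = 0; cron: Sunday = 0
        dom_ok, dow_ok = t.day in doms, cron_dow in dows
        # Classic cron rule: if both day fields are restricted, matching either is enough.
        day_ok = (dom_ok and dow_ok) if (dom_any or dow_any) else (dom_ok or dow_ok)
        if t.minute in minutes and t.hour in hours and t.month in months and day_ok:
            runs.append(t)
        t += timedelta(minutes=1)
    return runs


if __name__ == "__main__":
    start = datetime(2024, 6, 1, 12, 0)  # Saturday, 1 June 2024, 12:00
    for expr in ["0 * * * *", "0 3 * * 0", "0/15 * * * ?", "0/5 13,18 * * ?"]:
        print(expr, [r.strftime("%a %d %H:%M") for r in next_runs(expr, start, 3)])
```

Running `next_runs` on `0/15 0 * * ?` is a quick way to see why the hour field must be `*` for an all-day schedule: every fire time of that expression falls between 00:00 and 00:45.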

To learn more, see the Kubernetes CronJob documentation.

Test your job before scheduling

Scheduled jobs are launched periodically and run unattended, so it is essential to make sure the job works correctly before scheduling it. Because scheduled jobs behave in the same way as regular single jobs, you can test the execution by launching a single job before creating a schedule.
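As a complementary step (a general practice, not an Ilum feature), you can keep the job's data logic in a plain function and check it locally before uploading the script. The minimal sketch below mirrors the `generate_sample_data` function from the Python example but returns plain dictionaries instead of Spark `Row` objects, so it runs without a Spark installation; the names `generate_sample_records` and `check_records` are hypothetical.

```python
import random


def generate_sample_records(n, rng=random):
    """Same fields as the example job's rows, as plain dicts (hypothetical helper)."""
    return [
        {"id": i, "name": f"Name_{i}", "value": rng.randrange(70, 100)}
        for i in range(n)
    ]


def check_records(records, expected_count):
    # Fail fast locally, before the job is scheduled to run unattended.
    assert len(records) == expected_count, "unexpected row count"
    assert len({r["id"] for r in records}) == expected_count, "duplicate ids"
    assert all(70 <= r["value"] < 100 for r in records), "value out of range"


if __name__ == "__main__":
    records = generate_sample_records(100, random.Random(42))  # seeded for repeatability
    check_records(records, 100)
    print("sample data checks passed")
```

A local check like this catches logic errors quickly, while the trial single job in Ilum still verifies what a local run cannot, such as cluster resources and access to the S3 bucket.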

Schedule launches Ilum single jobs

Scheduled jobs are launched as Ilum single jobs. This means that all your configurations, logs, outputs, and other features available for single jobs are also available for scheduled jobs.

Turn off schedules when they are not needed

If you no longer need the job to run periodically, turn off its schedule to avoid wasting resources. To do that, click the Pause button in the Schedules tab.

Edit existing schedules instead of creating new ones

To change the configuration of an existing schedule, click Edit on it instead of creating a new schedule.