Lineage

What is Data Lineage?

Data lineage is a cornerstone of modern data governance and management. It provides a comprehensive and auditable view of the data lifecycle by tracking the flow of data from its origin to its final destination.

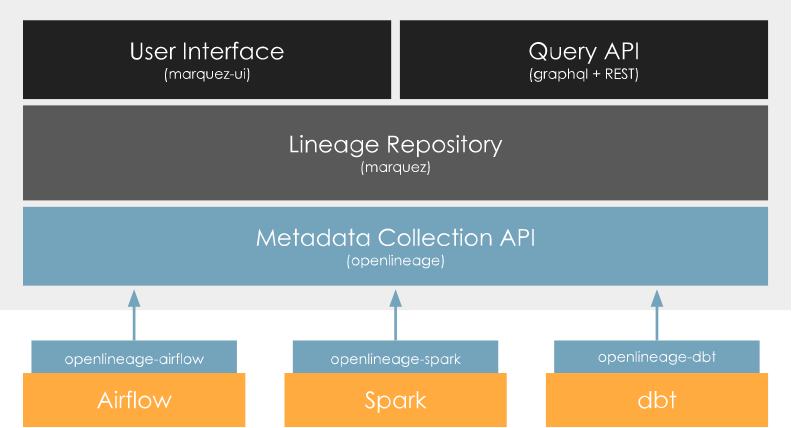

This is achieved by capturing operations on the data using standardized metadata formats such as OpenLineage and storing this information in a centralized repository like Marquez.
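In OpenLineage, each captured operation is sent as a run event: a JSON document that names the job, the run, and the input and output datasets. The sketch below assembles a minimal event by hand using only the Scala and Java standard libraries. It is an illustration of the event shape, not how the Spark listener is implemented. The producer URI is hypothetical, and the schema URL assumes spec version 2-0-2.

```scala
import java.time.Instant
import java.util.UUID

// Minimal, hand-rolled OpenLineage RunEvent builder (illustrative sketch only).
// Real producers such as the Spark listener attach many more facets.
object OpenLineageSketch {
  // Hypothetical producer URI identifying the code that emitted the event.
  val Producer = "https://example.com/lineage-sketch"
  // Assumed spec version; use the schema version your OpenLineage setup targets.
  val SchemaUrl = "https://openlineage.io/spec/2-0-2/OpenLineage.json#/$defs/RunEvent"

  // Quote a string as a JSON literal (escapes backslashes and double quotes only).
  private def q(s: String): String =
    "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\""
  private def kv(key: String, rawJson: String): String = q(key) + ":" + rawJson
  private def obj(fields: String*): String = fields.mkString("{", ",", "}")

  // Datasets are (namespace, name) pairs, as in the OpenLineage spec.
  private def datasets(ds: Seq[(String, String)]): String =
    ds.map { case (ns, name) => obj(kv("namespace", q(ns)), kv("name", q(name))) }
      .mkString("[", ",", "]")

  def runEvent(
      eventType: String, // e.g. "START", "COMPLETE", "FAIL"
      jobNamespace: String,
      jobName: String,
      inputs: Seq[(String, String)],
      outputs: Seq[(String, String)],
      runId: UUID = UUID.randomUUID(),
      eventTime: Instant = Instant.now()
  ): String =
    obj(
      kv("eventType", q(eventType)),
      kv("eventTime", q(eventTime.toString)),
      kv("run", obj(kv("runId", q(runId.toString)))),
      kv("job", obj(kv("namespace", q(jobNamespace)), kv("name", q(jobName)))),
      kv("inputs", datasets(inputs)),
      kv("outputs", datasets(outputs)),
      kv("producer", q(Producer)),
      kv("schemaURL", q(SchemaUrl))
    )
}
```

In Ilum you do not build these events yourself; the OpenLineage listener emits them for every job once Marquez is enabled.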

With lineage tracking, you can observe key metadata, including:

- Which processes consume a dataset

- Which processes modify datasets

- Which job produced a specific version of a dataset

Lineage services track only metadata; they do not store or provide access to the actual data itself.
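Once run events are recorded, each of the questions listed above becomes a simple query. The following sketch uses a hypothetical, simplified record per run: the job name, a run ID, and the names of the datasets it read and wrote. It treats a dataset "version" as the n-th recorded write to that dataset. That is an assumption made for illustration; Marquez maintains dataset versions itself.

```scala
// Hypothetical, simplified lineage record: one run of a job,
// reading some datasets and writing others.
final case class LineageRun(job: String, runId: String, inputs: Set[String], outputs: Set[String])

object LineageQueries {
  // Which processes consume a dataset?
  def consumers(runs: Seq[LineageRun], dataset: String): Set[String] =
    runs.filter(_.inputs.contains(dataset)).map(_.job).toSet

  // Which processes modify (write to) a dataset?
  def producers(runs: Seq[LineageRun], dataset: String): Set[String] =
    runs.filter(_.outputs.contains(dataset)).map(_.job).toSet

  // Which run produced a given version of a dataset? In this sketch, version n
  // is simply the n-th write (0-based) in the order the runs were recorded.
  def producerOfVersion(runs: Seq[LineageRun], dataset: String, version: Int): Option[LineageRun] =
    runs.filter(_.outputs.contains(dataset)).lift(version)
}
```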

In the context of Apache Spark, lineage tracking is typically integrated using an external Spark listener class. This listener observes key events such as job creation, job execution, and dataset updates. When an event occurs, the listener sends the corresponding metadata to the lineage service for storage and analysis.

Why Data Lineage Matters

Data lineage is essential for modern data-driven organizations. Its benefits include:

- Regulatory Compliance: Maintains detailed audit trails, making it easier to meet legal and industry standards (e.g., GDPR, CCPA).

- Transparency: Offers a clear view of data origins, transformations, and destinations, fostering trust and informed decision-making.

- Troubleshooting: Enables quick tracing of issues to their source, reducing downtime and improving operational efficiency.

- Data Governance: Aligns data practices with governance policies, ensuring secure and ethical data usage.

- Impact Analysis: Allows IT teams to assess the downstream effects of changes, saving significant time on manual analysis.

Ilum Lineage

Ilum integrates Marquez as its lineage service. When Marquez is enabled, Ilum automatically configures jobs to use the OpenLineage listener and provides a user-friendly UI to visualize the data flow of your jobs.

By default, Marquez is not enabled. To learn how to enable it, refer to the Production page.

You can find the lineage page by clicking on the Lineage tab in the sidebar.

Ilum Jobs with Marquez

Integration with Marquez is achieved using the Spark OpenLineage listener. Every time a job is executed, Ilum adds the following configurations to the job:

```properties
spark.extraListeners=io.openlineage.spark.agent.OpenLineageSparkListener
spark.openlineage.transport.type=http
spark.openlineage.transport.url=<MARQUEZ_URL>
spark.openlineage.transport.endpoint=/api/v1/lineage
```

This configuration applies to all jobs, including those run by SQL execution engines.
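If you run the same Spark application outside Ilum, for example locally while debugging, you have to pass these four properties yourself. The sketch below collects the properties listed above in a map and renders them as spark-submit --conf arguments. The Marquez address used in the usage example is hypothetical. Putting the OpenLineage listener JAR on the classpath is a separate step that this sketch does not handle.

```scala
object IlumLineageConf {
  // The four properties Ilum adds to every job; marquezUrl stands in for <MARQUEZ_URL>.
  def openLineageConf(marquezUrl: String): Map[String, String] = Map(
    "spark.extraListeners" -> "io.openlineage.spark.agent.OpenLineageSparkListener",
    "spark.openlineage.transport.type" -> "http",
    "spark.openlineage.transport.url" -> marquezUrl,
    "spark.openlineage.transport.endpoint" -> "/api/v1/lineage"
  )

  // Render the properties as spark-submit arguments (--conf key=value),
  // sorted by key so the output is deterministic.
  def toSubmitArgs(conf: Map[String, String]): Seq[String] =
    conf.toSeq.sortBy(_._1).flatMap { case (k, v) => Seq("--conf", s"$k=$v") }
}
```

For example, IlumLineageConf.toSubmitArgs(IlumLineageConf.openLineageConf("http://marquez:5000")) yields eight arguments, beginning with --conf spark.extraListeners=io.openlineage.spark.agent.OpenLineageSparkListener.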

If you enable lineage capturing, only jobs launched after that point will be captured.

Usage

Ilum provides lineage integration in two main areas:

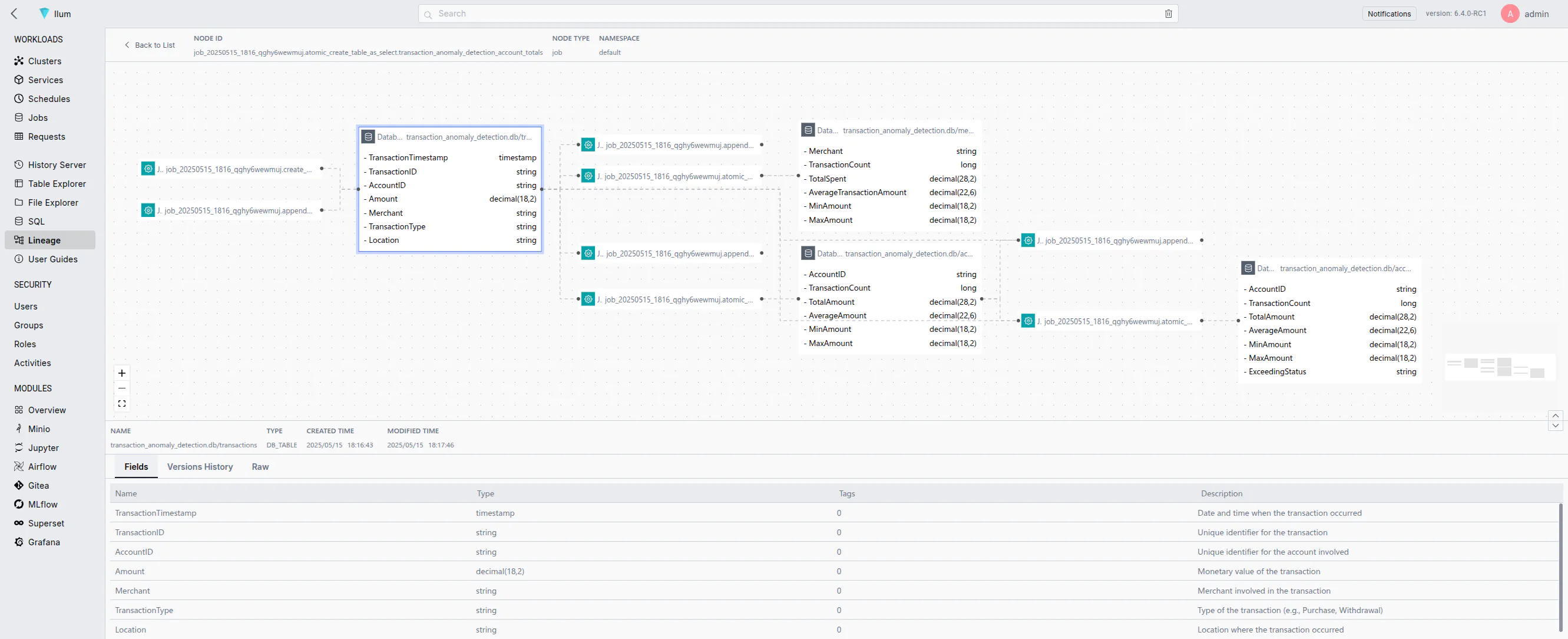

- Dedicated Lineage Page: Offers a per-job view of lineage data.

- Lineage in Table Explorer: Allows you to view the lineage of specific tables directly within the table explorer.

A sample lineage graph


A lineage graph consists of two types of nodes:

- Job nodes: Represent jobs that produce or consume datasets.

- Dataset nodes: Represent datasets that are produced or consumed by jobs.

Edges between nodes illustrate the relationships between jobs and datasets.
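Every edge connects a job to a dataset, so the impact analysis described under Why Data Lineage Matters comes down to walking the graph downstream from a node. Here is a breadth-first sketch of that walk. The node types and the edge map are hypothetical and are not Ilum's or Marquez's internal data model.

```scala
import scala.collection.mutable

// Sketch of a lineage graph with the two node kinds described above.
sealed trait Node { def name: String }
final case class JobNode(name: String) extends Node
final case class DatasetNode(name: String) extends Node

object LineageGraph {
  // Directed edges: dataset -> job (the job consumes it), job -> dataset (the job produces it).
  type Edges = Map[Node, Set[Node]]

  // Breadth-first walk collecting every node reachable downstream of `start`,
  // i.e. every job and dataset a change to `start` could affect.
  def downstream(edges: Edges, start: Node): Set[Node] = {
    val seen = mutable.Set[Node](start)
    val queue = mutable.Queue[Node](start)
    while (queue.nonEmpty) {
      val current = queue.dequeue()
      for (next <- edges.getOrElse(current, Set.empty[Node]))
        if (seen.add(next)) queue.enqueue(next) // add returns false if already visited
    }
    seen.toSet - start
  }
}
```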

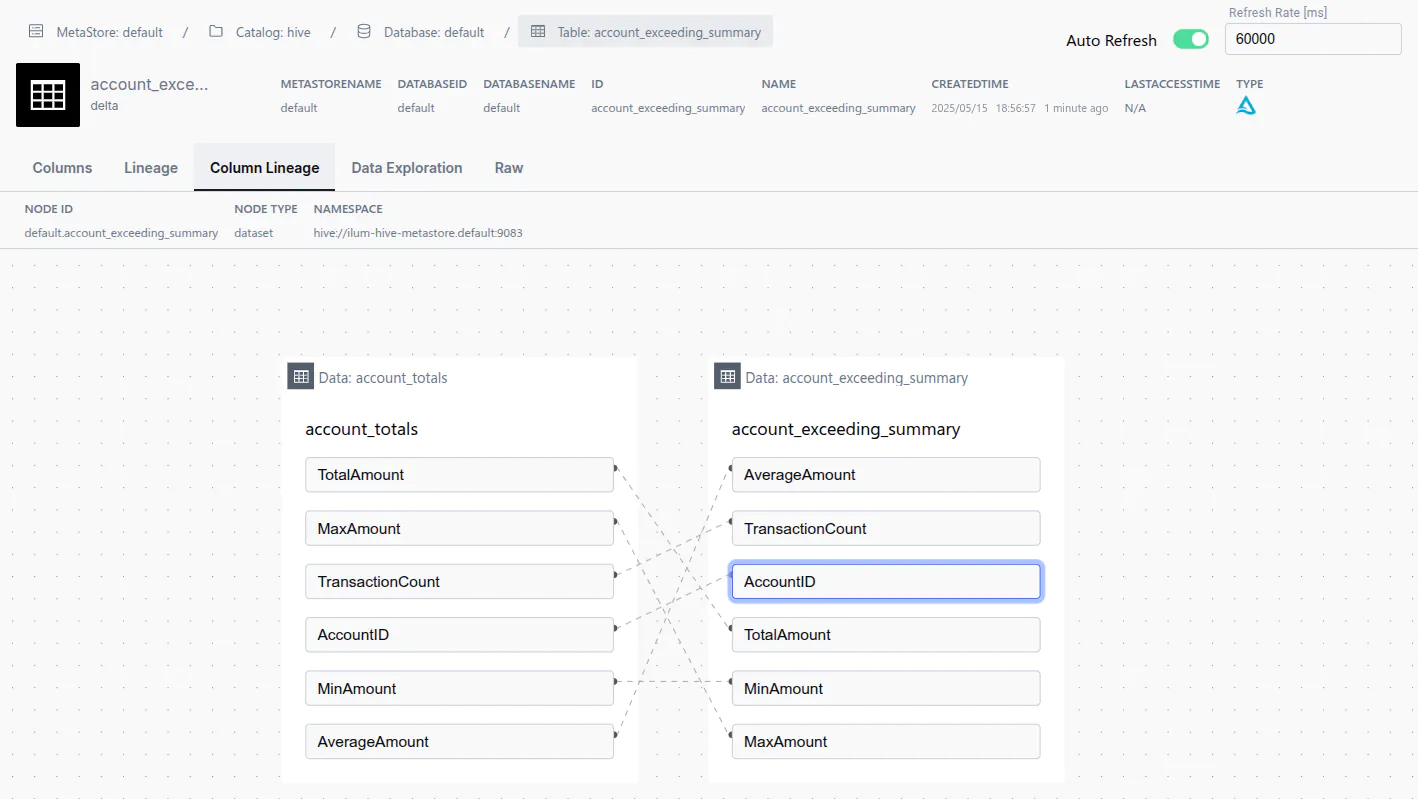

Within the table explorer, you can also view a column lineage graph, which shows the lineage of specific columns within a dataset.

A column lineage graph displays dependencies between columns in your data

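Column lineage records, for each derived column, the input columns it was computed from. In OpenLineage this information travels in a column-lineage facet attached to output datasets. Tracing a column back to its original source columns is then a recursive walk over those dependencies. The sketch below uses a hypothetical Column type and dependency map.

```scala
// A column is identified by its dataset and field name, mirroring the
// (dataset, field) pairs used in OpenLineage column-lineage facets.
final case class Column(dataset: String, field: String)

object ColumnLineage {
  // For each derived column, the columns it was computed from.
  type Deps = Map[Column, Set[Column]]

  // Follow dependencies upstream until reaching columns with no recorded inputs
  // (source columns). The visited set guards against cycles in bad metadata.
  def sourceColumns(deps: Deps, col: Column): Set[Column] = {
    def go(c: Column, visited: Set[Column]): Set[Column] = {
      val inputs = deps.getOrElse(c, Set.empty[Column])
      if (inputs.isEmpty) Set(c)
      else inputs.filterNot(visited).flatMap(in => go(in, visited + c))
    }
    go(col, Set.empty)
  }
}
```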

Tips & Best Practices

Leverage OpenLineage Listener Features

While the OpenLineage listener is pre-configured with essential settings, it offers many advanced features. For example, you can set a job’s namespace or manually tag a dataset. For more details, see the OpenLineage documentation.

Keep the OpenLineage Listener Up to Date

The OpenLineage listener is updated frequently, with new features and metadata collection capabilities. To benefit from the latest improvements, ensure you are using the most recent version.

Example: Older versions of the OpenLineage listener did not support column-level lineage tracking.

Implement a Custom Spark Listener for Advanced Metadata

By default, Ilum jobs send metadata about jobs and datasets to Marquez through the OpenLineageSparkListener, which implements Spark's listener interface. If you need to capture additional or custom metadata, you can implement your own Spark listener and attach it to your Spark sessions:

```properties
spark.extraListeners=com.example.CustomListener
```

Below is a basic example of a custom Spark Listener in Scala:

```scala
// The package must match the class name used in spark.extraListeners.
package com.example

import org.apache.spark.scheduler.{SparkListener, SparkListenerJobStart, SparkListenerJobEnd}

// Spark instantiates classes listed in spark.extraListeners reflectively, so the
// class needs a no-argument constructor (or one taking a SparkConf).
class CustomListener extends SparkListener {

  override def onJobStart(jobStart: SparkListenerJobStart): Unit = {
    // Custom logic to capture metadata when a job starts
    println(s"Job started: ${jobStart.jobId}")
    // You can send metadata to your lineage service here
  }

  override def onJobEnd(jobEnd: SparkListenerJobEnd): Unit = {
    // Custom logic to capture metadata when a job ends
    println(s"Job ended: ${jobEnd.jobId}, result: ${jobEnd.jobResult}")
    // You can send additional metadata here
  }
}
```

To use your custom listener, ensure it is available on the Spark classpath and reference it in your Spark configuration properties as shown above.

The spark.extraListeners property is a comma-separated list, so you can include multiple listeners if needed.
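The example listener only prints. To ship metadata from a custom listener, you need a transport. Here is a minimal sketch using the JDK 11+ HTTP client. It posts a JSON payload to the same endpoint Ilum configures for Marquez (/api/v1/lineage). It assumes the payload is already a valid OpenLineage event, and the base URL in the test is hypothetical.

```scala
import java.net.URI
import java.net.http.{HttpClient, HttpRequest, HttpResponse}
import java.time.Duration

object LineageSender {
  // Build a POST of a JSON event to <baseUrl><endpoint>, e.g. .../api/v1/lineage.
  def request(baseUrl: String, endpoint: String, eventJson: String): HttpRequest =
    HttpRequest.newBuilder()
      .uri(URI.create(baseUrl.stripSuffix("/") + endpoint))
      .timeout(Duration.ofSeconds(10))
      .header("Content-Type", "application/json")
      .POST(HttpRequest.BodyPublishers.ofString(eventJson))
      .build()

  // Send the request synchronously and return the HTTP status code.
  def send(client: HttpClient, req: HttpRequest): Int =
    client.send(req, HttpResponse.BodyHandlers.discarding()).statusCode()
}
```

Spark delivers listener events from a shared event queue. A blocking send inside onJobStart or onJobEnd can therefore slow event processing. Using HttpClient.sendAsync, or handing the work to a separate executor, avoids holding up that queue.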

Common Issues

No Lineage Data Captured

If you notice that no lineage data is being captured, consider the following troubleshooting steps:

- Ensure that the Marquez service is running and accessible.

- Make sure all dependencies for the OpenLineage listener are included in your Spark job.

- Check the logs of your Spark jobs for any errors related to the OpenLineage listener.

- Verify that the Marquez URL and endpoint (spark.openlineage.transport.url and spark.openlineage.transport.endpoint) are set correctly in the job's Spark configuration, and that any custom overrides you apply actually reach the job.
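Part of this checklist can be automated. The sketch below is a hypothetical helper that takes a configuration map and reports missing or malformed OpenLineage settings. Inside a job, you could obtain such a map with spark.sparkContext.getConf.getAll.toMap. The helper checks only the properties listed earlier on this page and cannot tell whether Marquez is actually reachable.

```scala
import java.net.URI
import scala.util.Try

object LineageConfCheck {
  private val Listener = "io.openlineage.spark.agent.OpenLineageSparkListener"

  private def check(ok: Boolean, problem: => String): Option[String] =
    if (ok) None else Some(problem)

  // True if s parses as an absolute URL with a host, e.g. "http://marquez:5000".
  private def isAbsoluteUrl(s: String): Boolean =
    Try(URI.create(s)).toOption.exists(u => u.getScheme != null && u.getHost != null)

  // Returns human-readable problems; an empty list means the basic settings look consistent.
  // Only configuration values are inspected; Marquez is never contacted.
  def problems(conf: Map[String, String]): List[String] = {
    val listeners = conf.getOrElse("spark.extraListeners", "").split(",").map(_.trim).toSet
    val url = conf.get("spark.openlineage.transport.url")
    val endpoint = conf.get("spark.openlineage.transport.endpoint")
    List(
      check(listeners.contains(Listener), s"spark.extraListeners does not include $Listener"),
      check(conf.get("spark.openlineage.transport.type").contains("http"),
        "spark.openlineage.transport.type is not set to http"),
      check(url.exists(isAbsoluteUrl),
        s"spark.openlineage.transport.url is missing or not an absolute URL: ${url.getOrElse("<unset>")}"),
      // The endpoint is checked only when it is present.
      check(endpoint.forall(_.startsWith("/")),
        s"spark.openlineage.transport.endpoint should start with '/': ${endpoint.getOrElse("")}")
    ).flatten
  }
}
```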