Monitoring

Ilum offers both basic and advanced monitoring capabilities by exposing detailed metrics for each Ilum job and associated clusters directly within the Ilum UI. Additionally, Ilum integrates with Prometheus and Graphite to collect metrics across the entire cluster, utilizes Grafana for visualizing these metrics with in-depth dashboards, and incorporates Loki and Promtail to efficiently manage logs.

Ilum Metrics

Ilum collects key information about each job and individual cluster, making it accessible to you through the Ilum UI.

Ilum Job Metrics

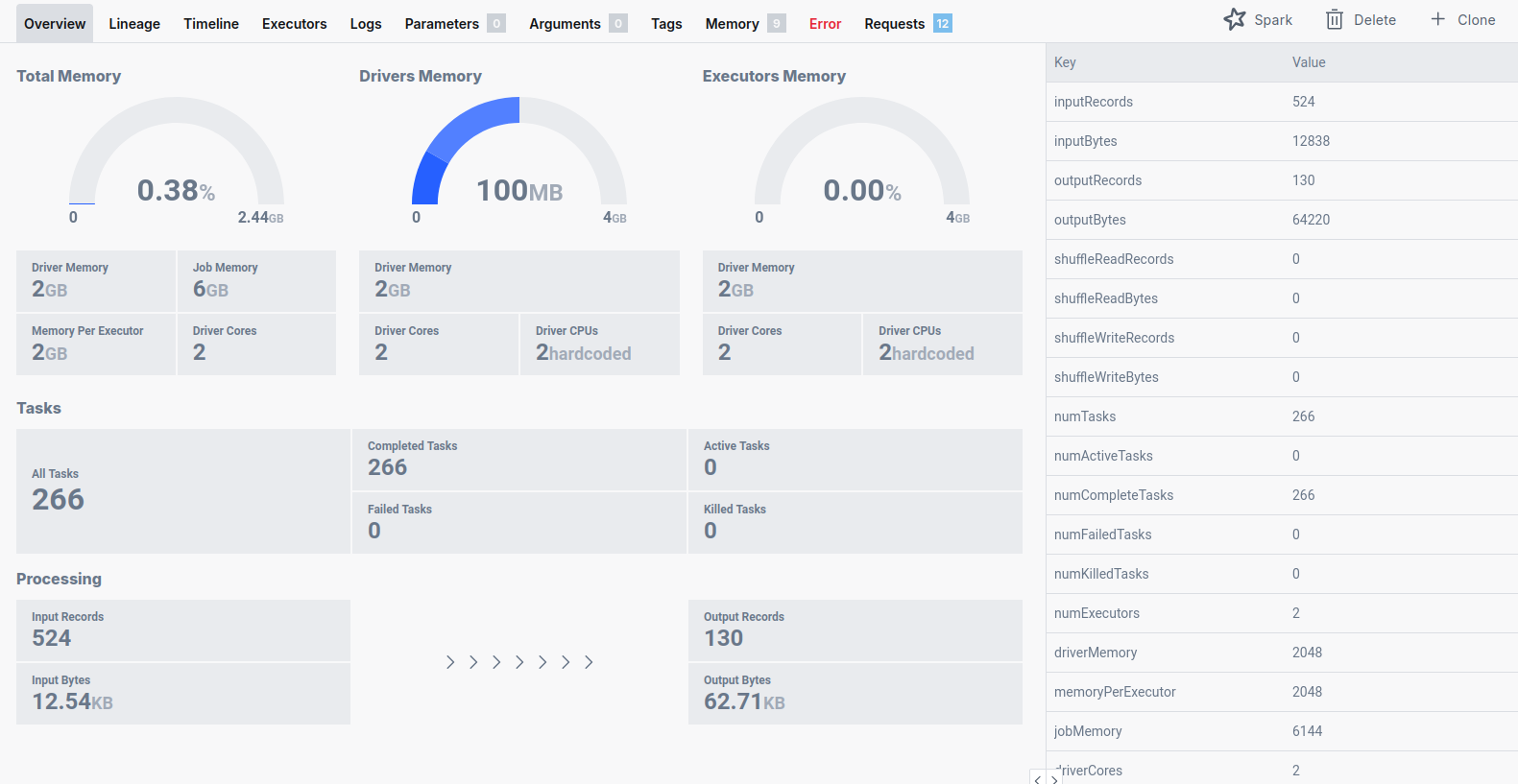

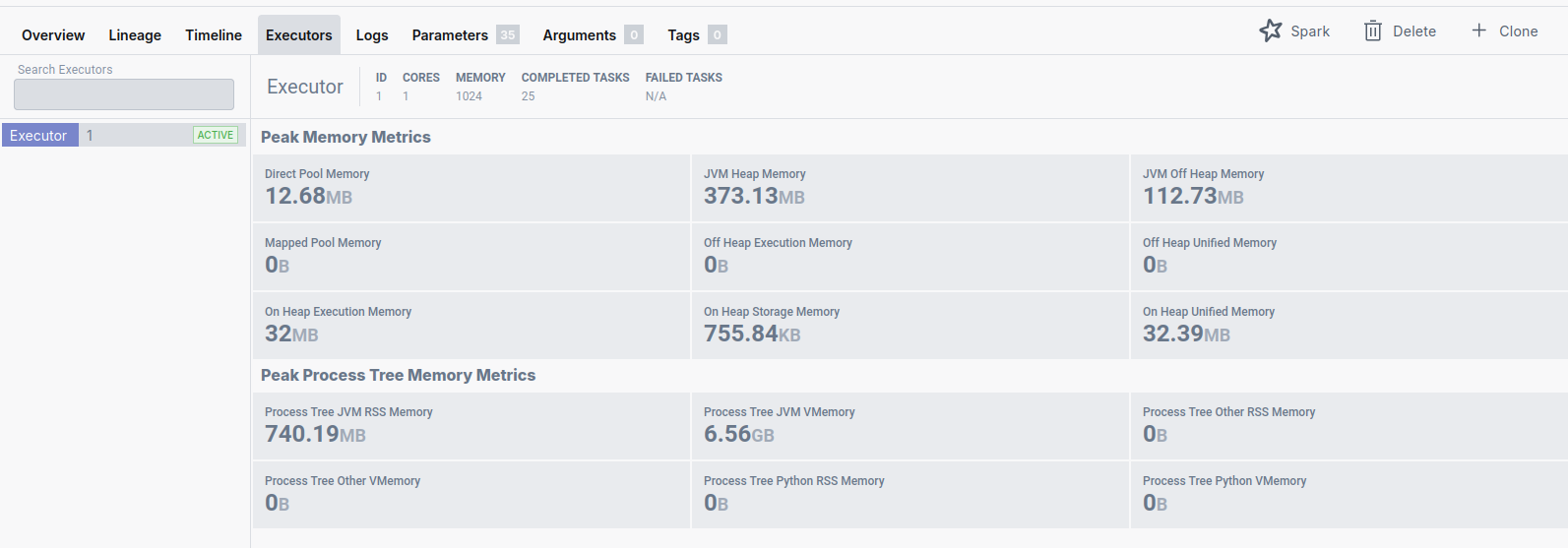

For each Ilum job, you can view the following details:

- Allocated Resources: The number of executors assigned to the job, the memory consumed by executors and the driver, and the number of CPUs and cores in use.

- Task Statistics: The total number of tasks, along with the count of currently running, completed, failed, or interrupted tasks.

- Data and Memory Statistics: Peak heap and storage memory usage, as well as the number of rows and bytes processed as input and output for the entire job and between individual stages.

- Logs: Access logs from the Spark driver and each executor directly within the Ilum UI.

- Executor Statistics: Memory usage details for each executor, broken down by memory type.

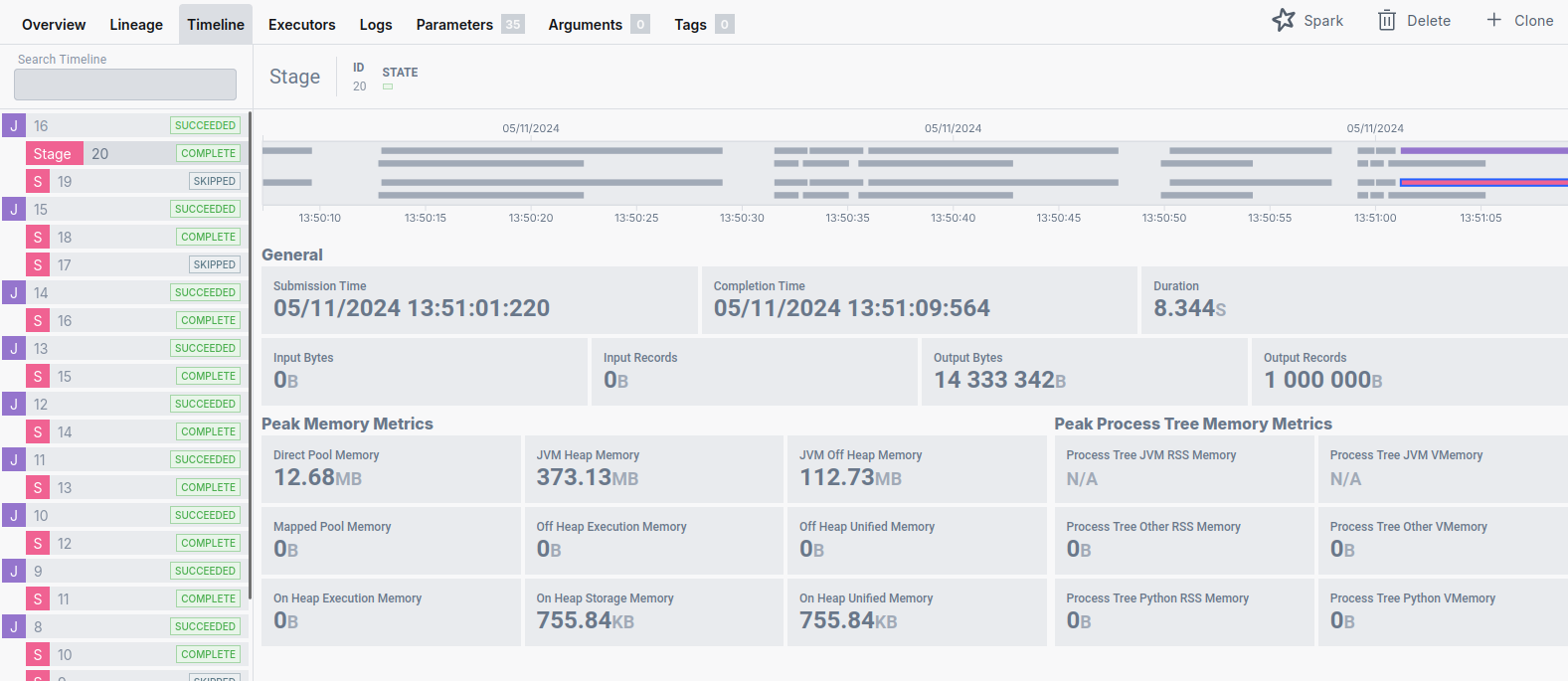

Ilum Job Timeline

In Spark, each application is divided into jobs and stages.

Spark Jobs are created when an action—such as collect, count, or write—is executed. Each of these actions triggers a separate Spark Job. Note: When we refer to Spark Jobs, we’re discussing Spark’s internal job concept, which is distinct from Ilum jobs.

Stages are generated whenever a shuffle operation occurs. Shuffle operations involve redistributing data across partitions, which cannot be performed within a single partition. Examples of these operations include groupByKey and join.

The Timeline module displays all Spark Jobs executed within an Ilum job, showing the stages contained in each Spark Job and their placement on a timeline. Additionally, for each stage, it provides detailed metrics such as execution time, memory usage, and input/output data size (in rows and bytes).

Clusters and Workload Overview

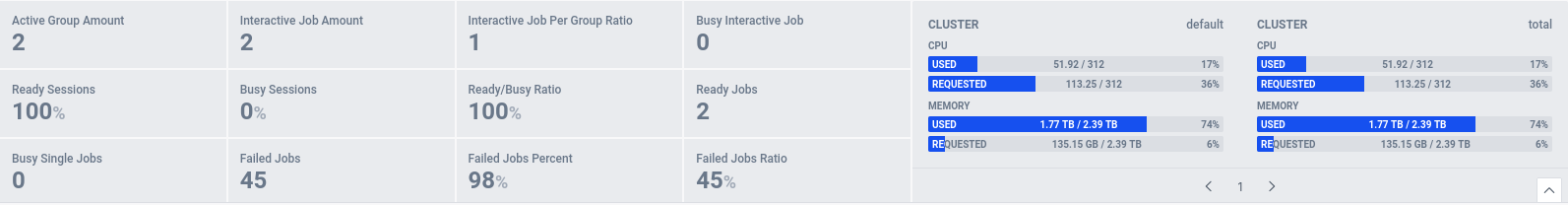

Ilum collects metrics for each added cluster both individually and in aggregate, tracking used and requested CPU and memory.

Additionally, you can monitor detailed Ilum job statistics:

How are metrics gathered?

Metrics for individual Ilum Jobs are gathered using the Ilum History Server.

Ilum Job statistics are gathered from the Ilum Server.

Cluster metrics are gathered directly from the clusters' APIs.

Spark UI

Every Spark job provides a web interface that you can use to monitor its status and resource consumption. This UI is accessible from the job details page in the Ilum UI.

Spark History Server

To make the UIs of completed Spark jobs accessible, the Ilum package includes a Spark History Server. The History Server stores Spark event logs in the default Kubernetes storage configured with the ilum-core.kubernetes.* Helm parameters.

Every cluster on which Ilum runs Spark jobs must have access to this storage. The Spark History Server is bundled with the Ilum package by default.

You can disable the Spark History Server with a helm upgrade command. For instance:

helm upgrade \
--set ilum-core.historyServer.enabled=false \
--reuse-values ilum ilum/ilum

You can access the Spark History Server from the Ilum UI or by using the port-forward command:

kubectl port-forward svc/ilum-history-server 9666:9666

All Ilum Jobs are preconfigured to write all of the information to the event logs:

spark.eventLog.enabled=true

spark.eventLog.dir=<event-log-storage-path>
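Because the event log is an ordinary file, it can also be inspected outside the History Server. The sketch below assumes the standard, uncompressed Spark event log layout (one JSON object per line, each with an `Event` field) and counts events by type. The function name `count_events` and the sample lines are invented for illustration; real events carry many more fields.

```python
import json
from collections import Counter


def count_events(lines):
    """Count Spark listener events by type in an iterable of event-log lines."""
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        event = json.loads(line)
        counts[event.get("Event", "unknown")] += 1
    return counts


# Invented, heavily truncated sample (assumption, not real Ilum output).
sample = [
    '{"Event": "SparkListenerJobStart", "Job ID": 0}',
    '{"Event": "SparkListenerStageCompleted"}',
    '{"Event": "SparkListenerJobEnd", "Job ID": 0}',
    '{"Event": "SparkListenerJobStart", "Job ID": 1}',
]
print(count_events(sample))
```

For a real log you would pass an open file handle instead of `sample`, since iterating over a file yields one line at a time.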

Prometheus

Overview

Prometheus is a sophisticated system designed for monitoring and alerting. It collects metrics from specified endpoints and organizes them into time series data.

For example, Prometheus can capture data on CPU tasks, as well as CPU and memory usage for Ilum Jobs, which are accessible through HTTP endpoints. Prometheus pulls this data every five seconds and stores it in an internal database, using indexing and other optimization techniques to ensure queries remain efficient and lightweight.

Time series data can be retrieved using metric names and labels in the following format:

metric_name{label_name="label_value",...}

You can query the data using PromQL (Prometheus Query Language) directly from the Prometheus server interface. For more details on PromQL, visit the official Prometheus documentation page.
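When queries are generated by a script rather than typed into the UI, the selector can be assembled programmatically. The following is a minimal sketch, not part of Ilum: the helper name `selector` is hypothetical, and it only covers equality matchers. Label values in PromQL are quoted strings, so the helper quotes and escapes them.

```python
def selector(metric, **labels):
    """Build a PromQL instant-vector selector such as name{key="value"}."""
    if not labels:
        return metric
    parts = []
    for key, value in sorted(labels.items()):
        # Escape backslashes and double quotes inside label values.
        escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
        parts.append(f'{key}="{escaped}"')
    return metric + "{" + ",".join(parts) + "}"


print(selector("metrics_executor_maxMemory_bytes", namespace="ilum"))
# prints: metrics_executor_maxMemory_bytes{namespace="ilum"}
```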

Deployment

Prometheus is included in Ilum through the kube-prometheus-stack. Note that it is not enabled by default. You can enable it with a helm upgrade command. For instance:

helm upgrade \
--set kube-prometheus-stack.enabled=true \
--set ilum-core.job.prometheus.enabled=true \
--reuse-values ilum ilum/ilum

The kube-prometheus-stack also includes Grafana. You can additionally enable Alertmanager by setting this Helm value:

helm upgrade \
--set kube-prometheus-stack.alertmanager.enabled=true \
--reuse-values ilum ilum/ilum

With this configuration, Prometheus scrapes metrics from all Spark jobs run by Ilum. To make this possible, Ilum preconfigures PodMonitors that tell Prometheus to scrape metrics from Ilum Job drivers and Core components.

Ilum Jobs are preconfigured to make their metrics available through an endpoint like this:

spark.ui.prometheus.enabled=true

spark.metrics.conf.*.sink.prometheusServlet.class=org.apache.spark.metrics.sink.PrometheusServlet

spark.metrics.conf.*.sink.prometheusServlet.path=/metrics/prometheus/

spark.executor.processTreeMetrics.enabled=true

spark.executor.metrics.pollingInterval=5s
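The endpoint configured above serves metrics in the Prometheus text exposition format, which is also easy to read by hand or from a script. The sketch below is a deliberately simple parser for that format; it is not Ilum code, the function name `parse_metrics` is hypothetical, and the sample text (including its metric names) is invented. It assumes that label values do not contain closing braces.

```python
import re

# Metric name, optional {labels}, then the sample value (a trailing timestamp is ignored).
LINE = re.compile(r'^([A-Za-z_:][A-Za-z0-9_:]*)(?:\{(.*)\})?\s+(\S+)')
LABEL = re.compile(r'([A-Za-z_][A-Za-z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text):
    """Parse Prometheus text exposition format into (name, labels, value) tuples."""
    samples = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = LINE.match(line)
        if match is None:
            continue  # ignore lines this simple parser does not understand
        name, label_text, value = match.groups()
        labels = dict(LABEL.findall(label_text or ""))
        samples.append((name, labels, float(value)))
    return samples


# Invented sample text (assumption): real output has many more series.
sample_text = """# TYPE example gauge
metrics_app_driver_jvm_heap_used_Value{type="gauges"} 1048576
metrics_executor_maxMemory_bytes{application_id="app-1", executor_id="1"} 4.5E8
plain_counter 7
"""
for sample in parse_metrics(sample_text):
    print(sample)
```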

You can access Prometheus UI using the port-forward command:

kubectl port-forward svc/prometheus-operated 9090:9090

Usage Example

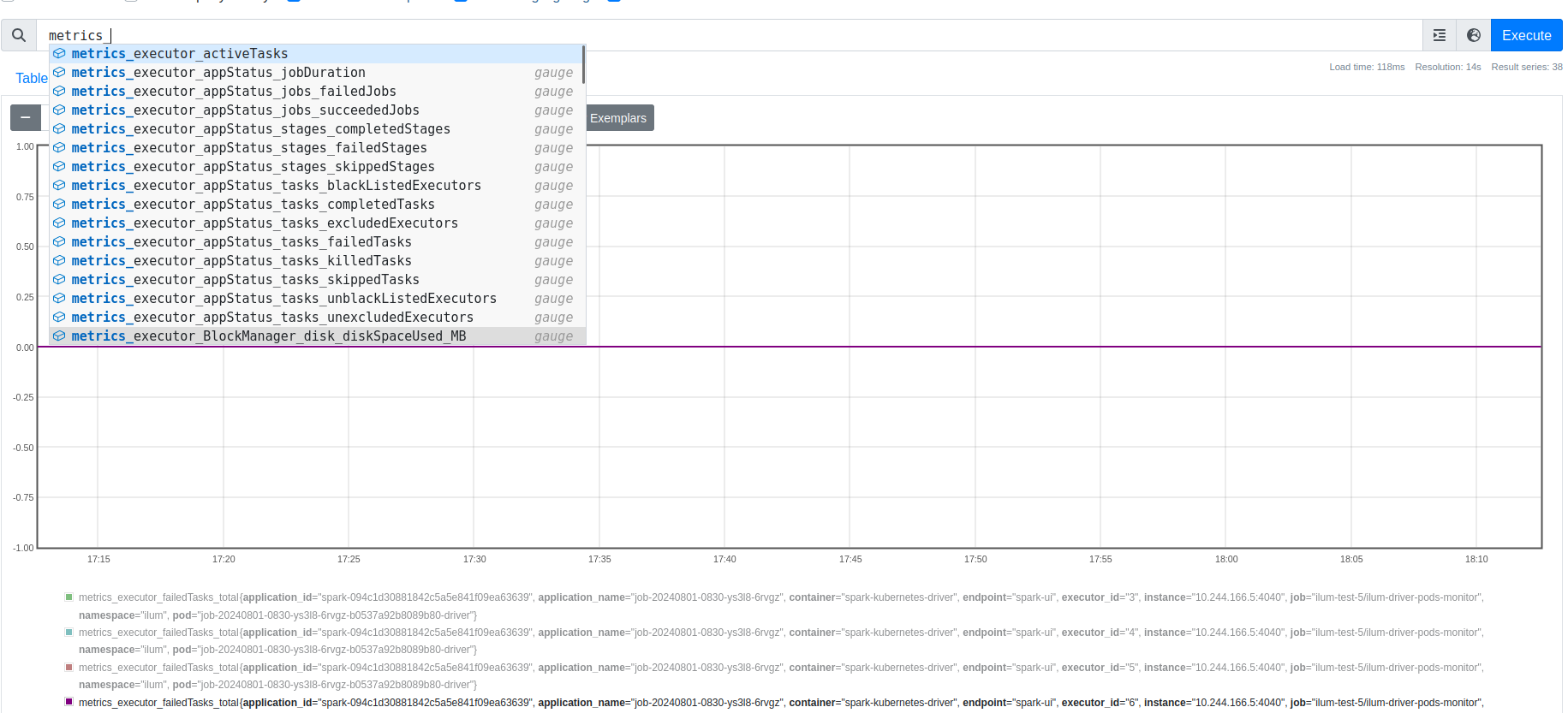

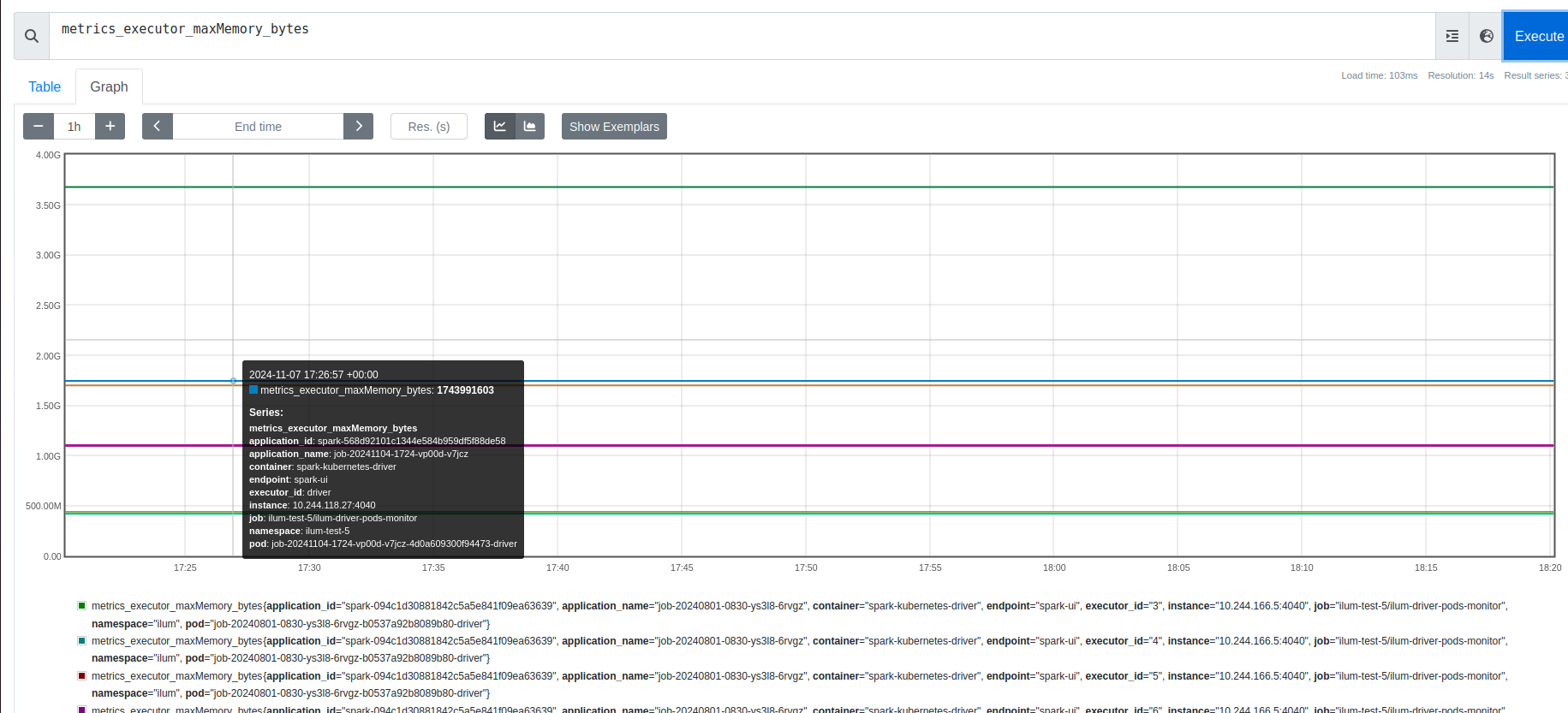

Go to the Prometheus UI. There you will find the Table view, where you can execute PromQL queries.

Type metrics into the input field to see all the metrics that Prometheus pulled from the Spark applications.

For example, you can type these queries to get different views on max memory used by executors:

#max memory used by each executor of each Ilum Job

metrics_executor_maxMemory_bytes

#max memory used in a given cluster namespace

max(metrics_executor_maxMemory_bytes{namespace="ilum"})

#other aggregations of maxMemory

min(metrics_executor_maxMemory_bytes)

avg(metrics_executor_maxMemory_bytes)

#max memory for specific job:

metrics_executor_maxMemory_bytes{pod="job-<id>-driver"}
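The same queries can be run from a script through the Prometheus HTTP API instead of the UI. This is a minimal sketch under stated assumptions: Prometheus is reachable at a base URL such as http://localhost:9090 (for example through the port-forward shown earlier), and the helper names `query_url`, `extract_values`, and `run_query` are hypothetical. Only the standard instant-query endpoint `/api/v1/query` and its vector response shape are used.

```python
import json
import urllib.parse
import urllib.request


def query_url(base_url, promql):
    """Build the URL for an instant query against the Prometheus HTTP API."""
    params = urllib.parse.urlencode({"query": promql})
    return base_url.rstrip("/") + "/api/v1/query?" + params


def extract_values(response):
    """Map each series' label set to its value from an instant-vector response."""
    if response.get("status") != "success":
        raise ValueError("query failed: " + str(response.get("error")))
    results = {}
    for series in response["data"]["result"]:
        labels = tuple(sorted(series["metric"].items()))
        _timestamp, value = series["value"]  # values arrive as strings
        results[labels] = float(value)
    return results


def run_query(base_url, promql):
    """Execute the query; requires a reachable Prometheus server."""
    with urllib.request.urlopen(query_url(base_url, promql), timeout=10) as resp:
        return extract_values(json.load(resp))
```

With the port-forward running, `run_query("http://localhost:9090", 'max(metrics_executor_maxMemory_bytes{namespace="ilum"})')` would return a dictionary keyed by label set. An aggregation such as `max(...)` without grouping yields a single entry with an empty label set.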

Grafana

Overview

Grafana is a powerful and sophisticated data visualization tool that enables users to create a wide variety of interactive charts and dashboards. It is capable of fetching real-time data from multiple data sources through preconfigured plugins, making it a highly flexible and scalable solution for monitoring and analysis.

In the context of system and application monitoring, Grafana dashboards often integrate with popular data sources such as Prometheus for metric collection and Loki for log aggregation. This combination allows for seamless visualization of both metrics and logs in a unified interface, helping users quickly identify performance issues, troubleshoot problems, and gain insights into the overall health of their infrastructure and applications.

Deployment

The kube-prometheus-stack includes Grafana, which uses metrics scraped by Prometheus or pushed to Graphite to feed dashboards that give a better overview of your Spark jobs. Ilum provides default dashboards for monitoring Spark jobs and Ilum pods. They can be found in the Ilum folder of Grafana's dashboards.

You can access Grafana UI using the port-forward command:

kubectl port-forward svc/ilum-grafana 8080:80

Log in with the default credentials admin:prom-operator.

Usage Examples

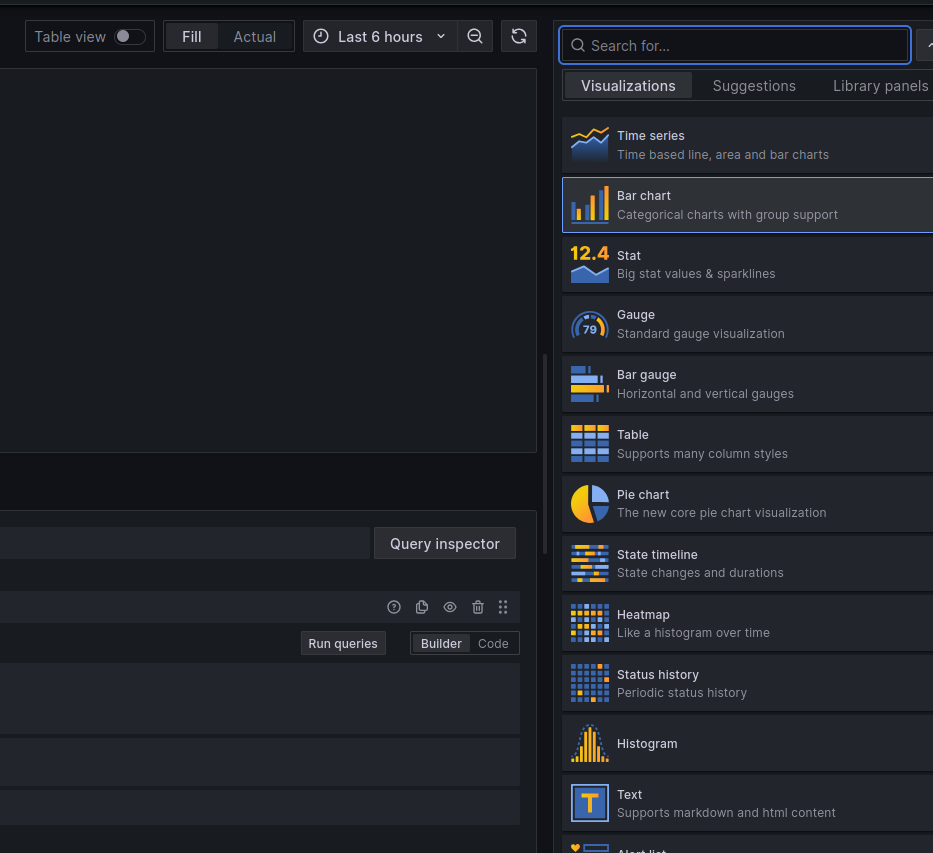

- Add the Datasource you want to use.

For example, to add a Loki datasource, you only need to provide the Loki URL: http://ilum-loki-gateway

- Create a dashboard and choose the desired chart type

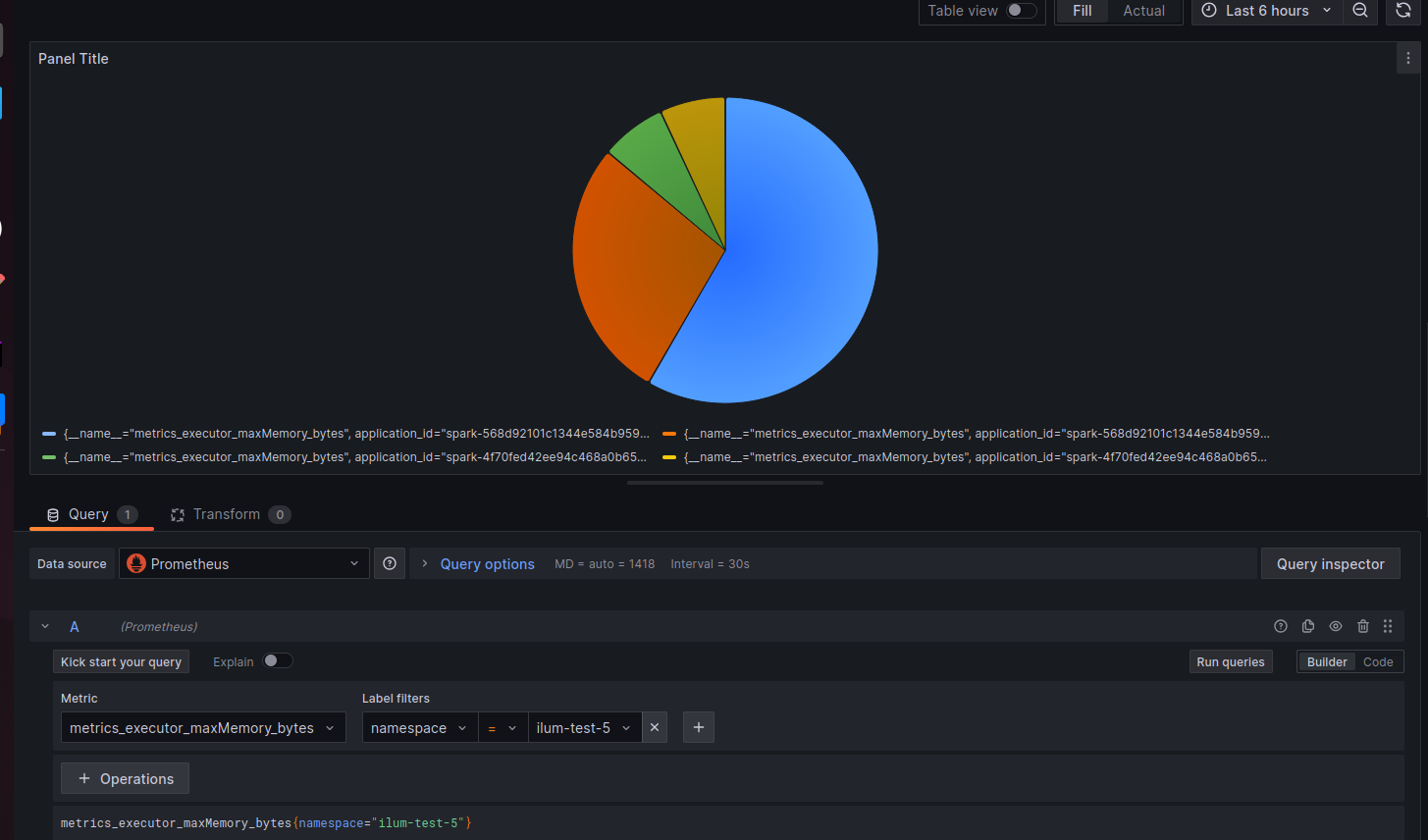

For example, we will choose the preconfigured Prometheus datasource and a pie chart for visualization.

- Create a Query you want to use

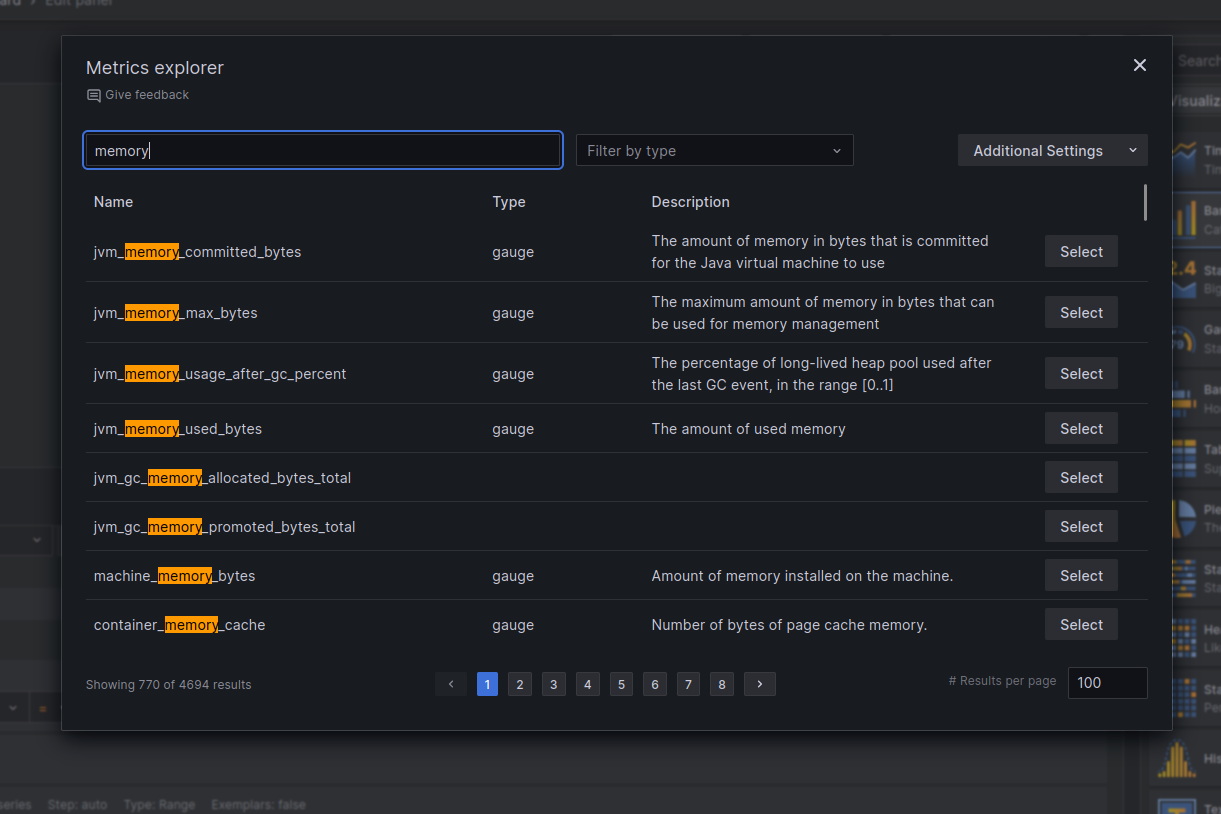

You can do this with the query builder provided by the Prometheus plugin. For example, you can find metrics using the metrics explorer:

In this example we will use metrics_executor_maxMemory_bytes.

After that, we set the namespace label so that only time series from the current namespace are selected.

Finally, we click run and see the results:

Preconfigured Dashboards

The preconfigured dashboards partly present the same information as the views embedded in the Ilum UI and partly gather it in a different way.

You can find them under Dashboards > Ilum folder.

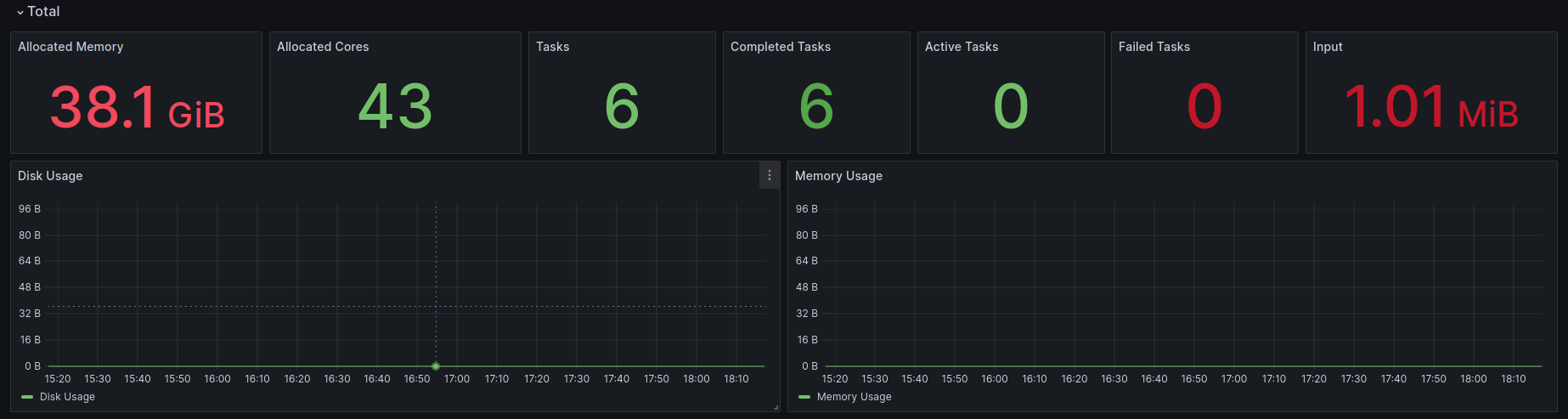

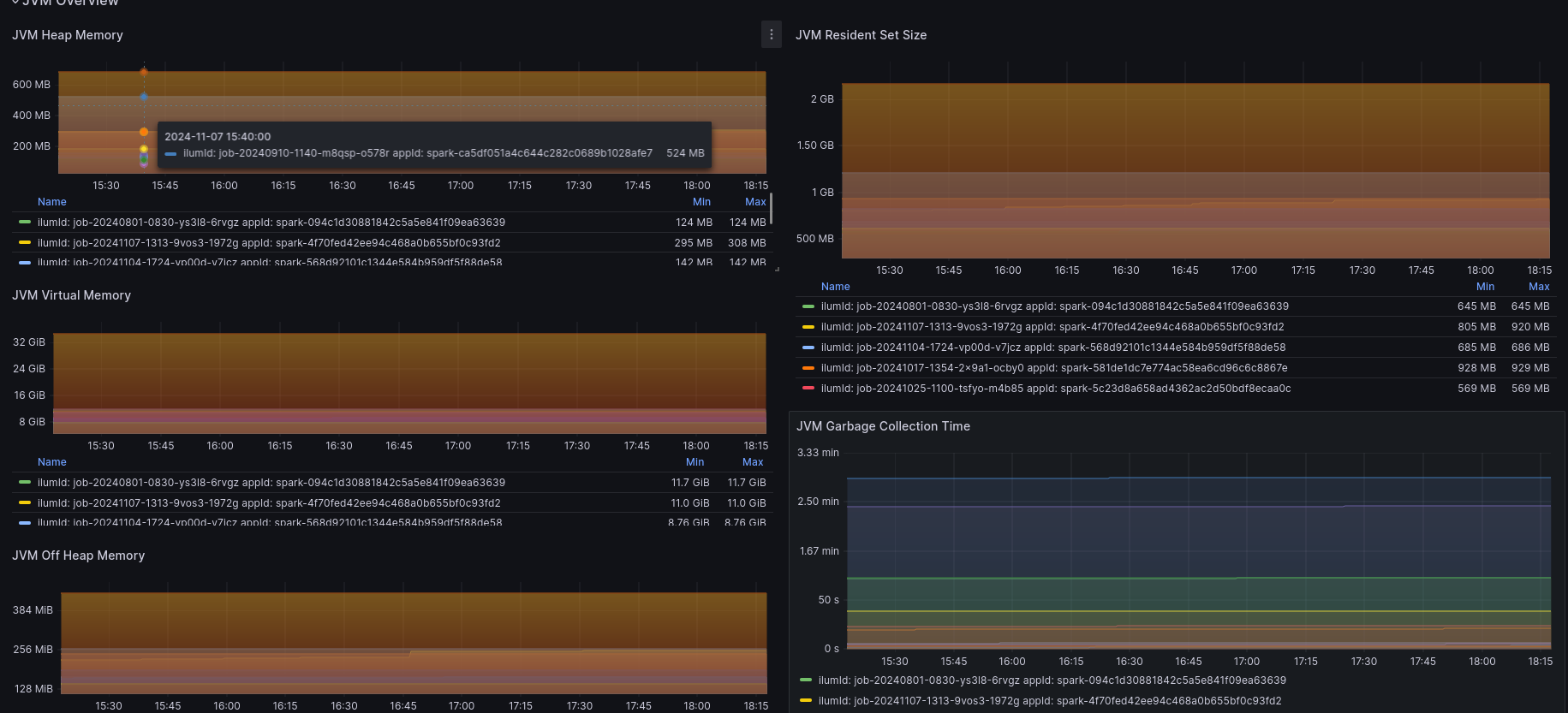

- Ilum Spark Jobs Overview

It contains information summed from all Ilum Jobs across a cluster:

- Allocated resources: CPU and cores

- Input, Disk and Runtime memory usage

- Task statistics: active, failed, interrupted and finished spark tasks

- JVM memory statistics

- Ilum Spark Job

It contains the same information, but about individual Ilum Jobs

- Ilum Graphite Spark Job

It contains information about individual Ilum Jobs gathered with Graphite

- Ilum Pod

This dashboard lets you observe statistics about Ilum-Core:

- CPU and memory usage, load and timing

- JVM statistics

- HTTP statistics

Graphite

Overview

Graphite is an alternative solution for gathering time-series data, including metrics.

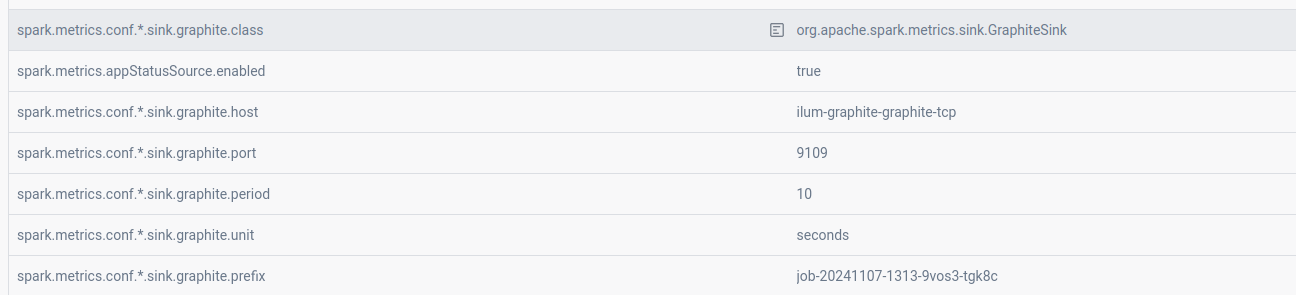

Graphite uses a push-based model, so Ilum preconfigures Spark sessions to push all their metrics to the Graphite server.
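To make the push model concrete, here is a minimal sketch of how any client can push a sample using Graphite's plaintext protocol, where each sample is one line of the form `<metric path> <value> <timestamp>`. This is not the Spark configuration that Ilum applies; the helper names, the metric path, and any host or port you pass in are assumptions for illustration.

```python
import socket
import time


def graphite_line(path, value, timestamp=None):
    """Format one sample for Graphite's plaintext protocol."""
    if timestamp is None:
        timestamp = int(time.time())  # default to the current Unix time
    return f"{path} {value} {int(timestamp)}\n"


def push(host, port, lines):
    """Send pre-formatted lines to a Graphite-compatible listener over TCP."""
    payload = "".join(lines).encode("ascii")
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(payload)


# Hypothetical metric path, used only to show the line format.
print(graphite_line("spark.example.driver.jvm.heap.used", 42, 1700000000), end="")
# prints: spark.example.driver.jvm.heap.used 42 1700000000
```

Calling `push(host, port, [...])` requires a reachable listener. In contrast to Prometheus, the receiving side never contacts the application; it only accepts whatever is sent.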

Deployment

Graphite is not enabled in Ilum by default. You can enable it with this helm command:

helm upgrade ilum ilum/ilum \
--set ilum-core.job.graphite.enabled=true \
--set graphite-exporter.graphite.enabled=true \
--reuse-values

Usage

Graphite integrates seamlessly with Grafana, and Ilum simplifies this process for you. Ilum provides a pre-built dashboard that displays comprehensive metrics information for individual jobs, including:

- Task statistics

- Read and write statistics

- Memory and CPU statistics

- JVM statistics

Grafana uses the Prometheus Data Source to query metrics from Graphite.

To make use of the dashboard, follow these steps:

- Enable the kube-prometheus-stack if it is not enabled yet:

helm upgrade \
--set kube-prometheus-stack.enabled=true \
--reuse-values ilum ilum/ilum

- Open the Grafana UI. Run this command:

kubectl port-forward svc/ilum-grafana 8080:80

Go to localhost:8080 and log in with the default credentials admin:prom-operator.

- Go to the Ilum Graphite Spark Job dashboard and enter the ID of the Ilum Job you are interested in.

Loki and Promtail

Overview

Loki is a log aggregation tool integrated into the Ilum infrastructure due to its cost-effectiveness and scalability. Key Features of Loki:

- Each component in the Loki architecture can be scaled independently based on specific needs.

- Logs are compressed to save storage space.

- Only metadata about the logs is indexed, not the logs themselves.

- Loki is a push-based system: it does not pull logs, but rather requires agents to push them.

- LogQL is the query language used to retrieve logs, which is similar to PromQL.

In the Ilum infrastructure, Loki is configured to store all log files and indexed metadata on Ilum-minio, the default storage.

Promtail is an agent responsible for collecting application logs and pushing them into Loki. The log collection process can be configured in the scrape_configs section. For example, you can configure a pipeline to define log sources, transformations, modifications, and filtering rules.

In Ilum, Promtail is preconfigured to listen to each Ilum Service and Ilum Job.

Deployment and configurations

To make use of Loki and Promtail you can run helm upgrade like this:

helm upgrade ilum ilum/ilum \
--set global.logAggregation.enabled=true \
--set global.logAggregation.loki.enabled=true \
--set global.logAggregation.promtail.enabled=true \
--reuse-values

LogQL

To retrieve logs stored in Loki, you can use the LogQL query language. In LogQL, all logs are divided into log streams. Each log stream is identified by its label set. For example, a set of labels can identify an application:

{app="job-20241107-1313-9vos3-1972g-9f73c39306c1c7b0-driver", namespace="ilum"}

You can use labels such as app, container, pod, namespace, ilum_jobId, ilum_groupId, spark_version, spark_app_name, and others.

When working with a log stream, you can apply aggregation functions. Note that max_over_time needs a numeric value extracted with unwrap; <numeric_label> below is a placeholder:

max_over_time({label="value"} | unwrap <numeric_label> [1h])

count_over_time({label="value"}[1h])

Line filters:

#to retrieve lines with "INFO" in them

{label="value"} |= "INFO"

#to exclude such lines

{label="value"} != "INFO"

And even line transformations:

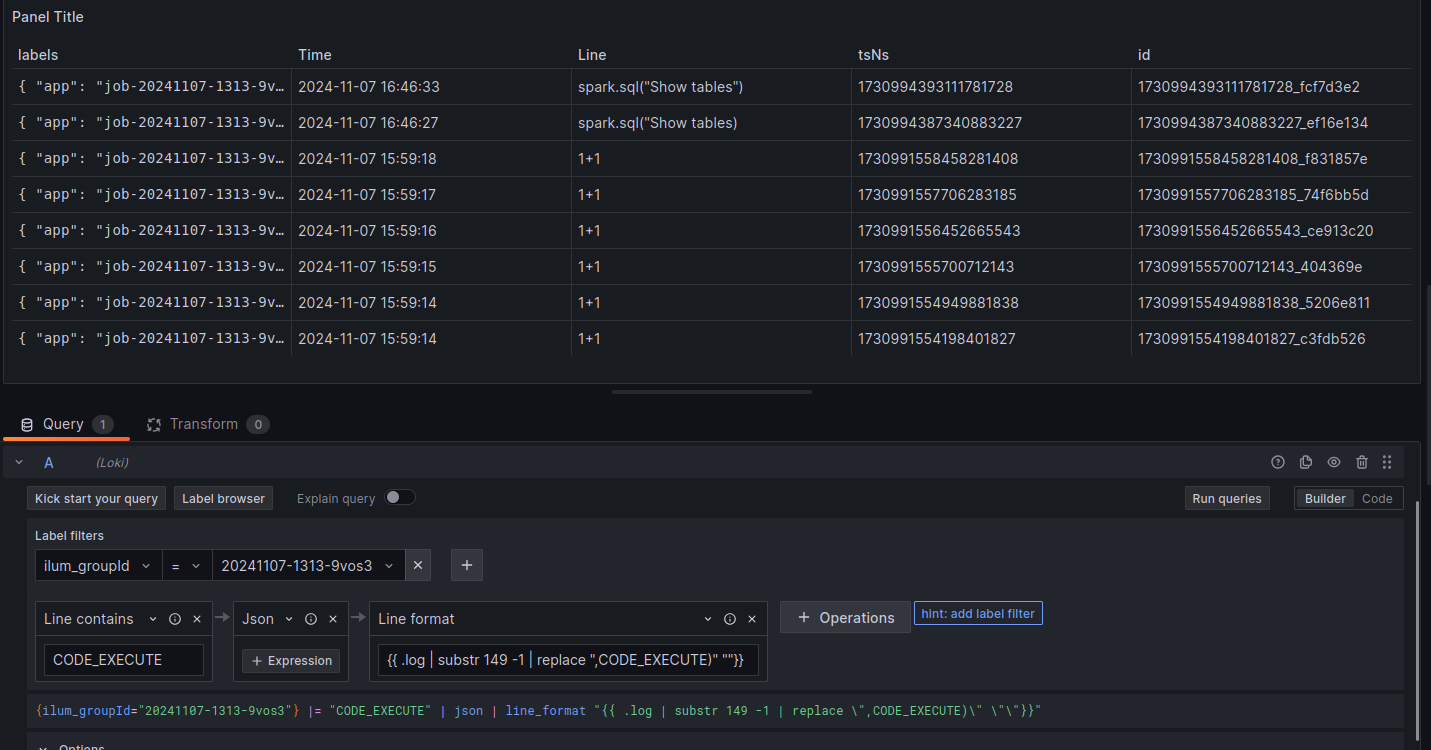
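If LogQL queries are generated by scripts, the pieces described above (stream selector, line filter, range aggregation) can be composed as strings. The sketch below is only an illustration: the helper names are hypothetical, and it covers equality matchers and the `|=` and `!=` filters only.

```python
def stream_selector(**labels):
    """Build a LogQL stream selector such as {app="x", namespace="ilum"}."""
    if not labels:
        raise ValueError("a stream selector needs at least one label matcher")
    parts = []
    for key, value in sorted(labels.items()):
        # Escape backslashes and double quotes inside label values.
        escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
        parts.append(f'{key}="{escaped}"')
    return "{" + ", ".join(parts) + "}"


def with_line_filter(log_query, text, exclude=False):
    """Append a line filter: |= keeps lines containing text, != drops them."""
    operator = "!=" if exclude else "|="
    escaped = text.replace("\\", "\\\\").replace('"', '\\"')
    return f'{log_query} {operator} "{escaped}"'


def count_over_time(log_query, window="1h"):
    """Wrap a log query in a count_over_time range aggregation."""
    return f"count_over_time({log_query}[{window}])"


query = with_line_filter(stream_selector(namespace="ilum"), "INFO")
print(query)                   # prints: {namespace="ilum"} |= "INFO"
print(count_over_time(query))  # prints: count_over_time({namespace="ilum"} |= "INFO"[1h])
```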

LogQL in Ilum Example

When you work with Ilum Code Groups, logs containing the executed code are generated. Let's retrieve these logs.

- Retrieve the logs of the group:

{ilum_groupId="20241107-1313-9vos3"}

- Filter out logs that are not related to executed code:

{ilum_groupId="20241107-1313-9vos3"} |= "CODE_EXECUTE"

- Remove non-code text, so that only raw code would be left:

{ilum_groupId="20241107-1313-9vos3"} |= "CODE_EXECUTE" | json | line_format "{{ .log | substr 149 -1 | replace \",CODE_EXECUTE)\" \"\"}}"
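To see what the last pipeline stage does to a single line, the sketch below mimics it in plain Python: `substr 149 -1` keeps everything from character 149 onward (a negative end means "to the end of the string"), and `replace` then removes the `,CODE_EXECUTE)` marker. The function name `extract_code` and the sample line are invented; the 149 filler characters merely stand in for the real log prefix, whose exact content is not shown here.

```python
def extract_code(log_line, prefix_length=149, marker=",CODE_EXECUTE)"):
    """Mimic `substr 149 -1 | replace ",CODE_EXECUTE)" ""` on one log line."""
    # substr with a negative end keeps everything from the start index onward.
    tail = log_line[prefix_length:]
    # replace removes every occurrence of the marker.
    return tail.replace(marker, "")


# Invented line (assumption): 149 filler characters replace the real prefix.
line = "x" * 149 + "spark.range(10).count(),CODE_EXECUTE)"
print(extract_code(line))  # prints: spark.range(10).count()
```

The fixed offset only works as long as the prefix in front of the code always has the same length, so it is worth re-checking if the log layout changes.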

Using Loki API

To execute read queries using the Loki API, you can send a GET request like this:

curl -G -s "http://<loki-endpoint>/loki/api/v1/query" \
--data-urlencode 'query={label1="value1"}'

For example, to run the query from the example above, start a port-forward in a separate terminal:

kubectl port-forward svc/ilum-loki-gateway 8000:80

Then run:

curl -G -s "http://localhost:8000/loki/api/v1/query" \
--data-urlencode 'query={app="job-20241107-1313-9vos3-1972g-9f73c39306c1c7b0-driver"} |= "CODE_EXECUTE" | json | line_format "{{ .log | substr 149 -1 | replace \",CODE_EXECUTE)\" \"\"}}"'
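The same kind of request can be issued from a script. The following is a minimal sketch, assuming Loki is reachable at a base URL such as http://localhost:8000 through the port-forward; the helper names are hypothetical. It uses the `query_range` endpoint, which returns log lines for a time window (the last hour when no start and end are given). That endpoint choice is an assumption of this sketch, not something the text above prescribes.

```python
import json
import urllib.parse
import urllib.request


def loki_query_url(base_url, logql, limit=100):
    """Build a query_range URL for the Loki HTTP API."""
    params = urllib.parse.urlencode({"query": logql, "limit": limit})
    return base_url.rstrip("/") + "/loki/api/v1/query_range?" + params


def extract_lines(response):
    """Flatten a 'streams' result into (timestamp_ns, line) tuples, oldest first."""
    data = response["data"]
    if data["resultType"] != "streams":
        raise ValueError("expected a log query result, got " + data["resultType"])
    entries = []
    for stream in data["result"]:
        for timestamp, line in stream["values"]:
            entries.append((int(timestamp), line))  # timestamps arrive as strings
    return sorted(entries)


def fetch_lines(base_url, logql):
    """Run the query; requires a reachable Loki endpoint."""
    with urllib.request.urlopen(loki_query_url(base_url, logql), timeout=10) as resp:
        return extract_lines(json.load(resp))
```

With the port-forward running, `fetch_lines("http://localhost:8000", '{namespace="ilum"} |= "CODE_EXECUTE"')` would return the matching lines in chronological order.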

Using Loki with Grafana

- Open the Grafana UI. Run this command:

kubectl port-forward svc/ilum-grafana 8080:80

Go to localhost:8080 and log in with the default credentials admin:prom-operator.

- Add the Loki datasource. To do this, add http://ilum-loki-gateway as the link to Loki.

- Create a dashboard:

- choose the Loki datasource

- choose labels to retrieve the log stream of your choice

- configure intervals and other parameters

- modify the query code the way you want

Final result for the example above can look like this: