How to add a Kubernetes cluster to Ilum?

Introduction

Ilum lets you manage a multi-cluster architecture from a single central control plane and handles the configuration automatically. You only need to register your cluster in Ilum and configure some networking.

This guide walks you through all the steps required to launch your first Ilum Job on a remote cluster. While we use GKE here, you can use any other Kubernetes provider of your choice.

Prerequisites

Before starting the tutorial:

- Install kubectl

- Install Helm

- Register a Google Cloud account

- Install the gcloud CLI

Step 1. Create a master cluster and a remote cluster

The master cluster will contain all the Ilum components. The remote cluster will be used to run jobs remotely from the master cluster.

Repeat the instructions below twice to create the master and remote clusters.

Create a project

- Go to the Google Cloud Console

- Click the project selector in the top left corner

- In the window that appears, select "New Project"

- Type a name for your project and click "Create"

- Then select your project in the project selector

Enable Kubernetes Engine

- Type "Kubernetes Engine" in the search bar.

- Click on "Kubernetes Engine".

- Click the "Enable" button.

Switch to the chosen project

- In the Google Cloud Console, click the project selector and copy the ID of your project

- Open a terminal

- Switch to the project that you created by using its ID in this command:

gcloud config set project PROJECT_ID

Create a cluster

gcloud container clusters create master-cluster \
  --machine-type=n1-standard-8 \
  --num-nodes=1 \
  --region=europe-central2

Keep in mind that Ilum is a resource-intensive application, and you won't be able to use it properly unless both the master and remote clusters have sufficient resources. In GKE, this can be specified using the --machine-type flag when creating a cluster. Choose a machine type and a number of nodes that give you at least 8 CPUs and 32 GB of memory on each cluster.
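To check whether a cluster meets this requirement, you can inspect the allocatable resources of its nodes. The following is a minimal sketch, assuming kubectl already points at the cluster you want to check. The helper names sum_cpu_millicores and cluster_cpu_millicores are made up for this illustration, and only CPU is summed (memory can be read from .status.allocatable.memory in the same way).

```shell
# Sum Kubernetes CPU quantities read from stdin, one per line.
# Accepts whole cores ("8") and millicores ("7910m"); prints millicores.
sum_cpu_millicores() {
  awk '
    /m$/ { sub(/m$/, ""); total += $1; next }  # value is already in millicores
    NF   { total += $1 * 1000 }                # whole cores -> millicores
    END  { print total + 0 }
  '
}

# Print the total allocatable CPU (in millicores) of all nodes
# in the cluster that the current kubectl context points at.
cluster_cpu_millicores() {
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.status.allocatable.cpu}{"\n"}{end}' \
    | sum_cpu_millicores
}
```

A result of 8000 corresponds to 8 full CPUs. Allocatable capacity is usually a little lower than the raw machine size, because Kubernetes reserves some resources on every node.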

Step 2. Set up Ilum on Master Cluster

Switch to the master cluster config in kubectl

# list kubectl contexts and find the one with your cluster

kubectl config get-contexts

# switch to it using this command

kubectl config use-context MASTER_CLUSTER_CONTEXT

Create namespace and switch to it in kubectl

kubectl create namespace ilum

kubectl config set-context --current --namespace=ilum

Install Ilum using helm charts:

helm repo add ilum https://charts.ilum.cloud

helm install ilum -n ilum ilum/ilum --version 6.2.0

Step 3. Set up authorization in remote cluster

To add a remote cluster to Ilum, we need to register a client certificate in the remote cluster and bind the certificate's user to a security role. Make sure your kubectl context points to the remote cluster while you follow this step.

Create a Client Key

openssl genpkey -algorithm RSA -out client.key

Create Certificate Request:

Replace myuser with the username of your choice

openssl req -new -key client.key -out csr.csr -subj "/CN=myuser"

Register CSR using yaml

Write csr.yaml:

apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: myuser
spec:
  request: <put here encoded base64 csr>
  signerName: kubernetes.io/kube-apiserver-client
  usages:
    - client auth

Replace myuser with the username of your choice

You can get the CSR base64-encoded like this:

cat csr.csr | base64 | tr -d '\n'

Finally, apply the yaml file with kubectl:

kubectl apply -f csr.yaml
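The encoding and the manifest can also be produced in one go. The sketch below is one possible way to do it, not the only one: render_csr_yaml is a hypothetical helper name, and it assumes the CSR file was created with openssl as described above.

```shell
# Print a CertificateSigningRequest manifest for the given user name,
# embedding the base64-encoded contents of the given CSR file.
# render_csr_yaml is a hypothetical helper name used only in this sketch.
render_csr_yaml() {
  user_name=$1
  csr_file=$2
  encoded=$(base64 < "$csr_file" | tr -d '\n')
  cat <<EOF
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: $user_name
spec:
  request: $encoded
  signerName: kubernetes.io/kube-apiserver-client
  usages:
    - client auth
EOF
}

# Usage:
#   render_csr_yaml myuser csr.csr > csr.yaml
#   kubectl apply -f csr.yaml
```

Generating the file this way avoids copy-and-paste mistakes in the long base64 string.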

Get the certificate

Approve the Certificate Signing Request:

kubectl certificate approve myuser

Retrieve the certificate and decode it into a file client.crt:

kubectl get csr myuser -o jsonpath='{.status.certificate}' | base64 --decode > client.crt

Get CA certificate and server url

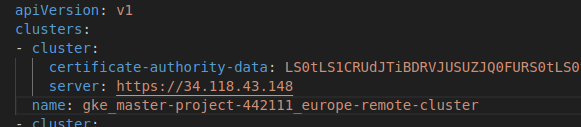

Go to kubeconfig file. Usually it is stored on path ~/.kube/config

For exapmle here:

Copy the server URL and save CA certificate as a file ca.crt:

echo "past encoded ca certificate here" | base64 --decode > ca.crt
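If you prefer not to copy values out of the kubeconfig by hand, kubectl can print them. This is a minimal sketch, assuming the remote cluster is the current kubectl context and that the kubeconfig embeds the CA as certificate-authority-data (rather than referencing a file). The function names are illustrative only.

```shell
# Print the API server URL of the current kubectl context.
current_server_url() {
  kubectl config view --raw --minify \
    -o jsonpath='{.clusters[0].cluster.server}'
}

# Write the CA certificate of the current kubectl context to the given file.
# Assumes the kubeconfig embeds it as certificate-authority-data.
save_ca_cert() {
  kubectl config view --raw --minify \
    -o jsonpath='{.clusters[0].cluster.certificate-authority-data}' \
    | base64 --decode > "$1"
}

# Usage, with the remote cluster selected as the current context:
#   current_server_url
#   save_ca_cert ca.crt
```

The --minify flag limits the output to the current context, so index 0 in the clusters list refers to the cluster that context uses.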

Bind a role to your user by creating a Kubernetes ClusterRoleBinding

Create a file rolebinding.yaml:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: myuser-admin-binding
subjects:
  - kind: User
    name: myuser
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

And apply it:

kubectl apply -f rolebinding.yaml

Note: In this example we have bound the user to the cluster-admin role; however, you can create your own role and manage its privileges the way you want.

Test authorization using kubectl

To do this, add a user with the certificate and key to your kubeconfig and then create a context that combines your cluster with this user.

Move ca.crt, client.crt and client.key to /home/your-linux-user/remote-cluster

mkdir -p /home/your-linux-user/remote-cluster

mv ca.crt client.crt client.key /home/your-linux-user/remote-cluster

Open the kubeconfig at ~/.kube/config

In the users list add this user:

- name: myuser
  user:
    client-certificate: /home/your-linux-user/remote-cluster/client.crt
    client-key: /home/your-linux-user/remote-cluster/client.key

In the contexts list add this context:

- context:
    cluster: <your cluster name from clusters list>
    namespace: default
    user: myuser
  name: remote-cluster

The cluster name can be taken from the clusters list in the same file.

To test if it works, run:

kubectl config use-context remote-cluster

kubectl get pods

If the result is

No resources found in default namespace.

or a list of pods in your cluster then everything works!
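The same user and context entries can also be written with kubectl instead of editing the kubeconfig by hand. The sketch below assumes the certificate files were moved to a directory as described above. add_remote_context is a hypothetical helper name, and the context name remote-cluster matches the one used in this guide.

```shell
# Register a user and a context in the kubeconfig without editing it by hand.
# Arguments: user name, cluster name (as listed in the kubeconfig),
# and the directory holding client.crt and client.key.
add_remote_context() {
  user_name=$1
  cluster_name=$2
  cert_dir=$3
  kubectl config set-credentials "$user_name" \
    --client-certificate="$cert_dir/client.crt" \
    --client-key="$cert_dir/client.key" &&
  kubectl config set-context remote-cluster \
    --cluster="$cluster_name" \
    --user="$user_name" \
    --namespace=default
}

# Usage:
#   add_remote_context myuser <your cluster name> /home/your-linux-user/remote-cluster
#   kubectl config use-context remote-cluster
#   kubectl get pods
```

Using the kubectl config subcommands keeps the YAML indentation correct and leaves the rest of the file untouched.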

Manage Service Account

A Service Account is the Kubernetes equivalent of a user for pods. It is used by ilum-core and Spark jobs. For example, the service account is used by the Spark driver to deploy Spark executors.

By default, the service account named default is used, but it may not have sufficient privileges.

To resolve this issue, you can create a role binding, similar to what we did before with the user, to grant the necessary permissions. Create a sa_role_binding.yaml file:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: default-sa-admin-binding
subjects:
  - kind: ServiceAccount
    name: default
    namespace: default
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

Then apply it to bind the admin role to the default service account:

kubectl apply -f sa_role_binding.yaml

Note: you can create a custom ServiceAccount, bind it to your custom role and use it instead of the default service account. Just remember to specify the ServiceAccount that you are using in the Spark configuration:

spark.kubernetes.authenticate.driver.serviceAccountName=YourServiceAccount
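As an illustration of the custom ServiceAccount option, the sketch below prints a ServiceAccount together with a ClusterRoleBinding to an existing ClusterRole. Everything here is an assumption made for the example: the helper name render_service_account_yaml, the generated binding name, and the names passed in the usage line are placeholders to replace with your own.

```shell
# Print manifests for a ServiceAccount and a ClusterRoleBinding that
# binds it to an existing ClusterRole. All names are supplied by the caller.
render_service_account_yaml() {
  sa_name=$1
  namespace=$2
  cluster_role=$3
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${sa_name}
  namespace: ${namespace}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ${sa_name}-binding
subjects:
  - kind: ServiceAccount
    name: ${sa_name}
    namespace: ${namespace}
roleRef:
  kind: ClusterRole
  name: ${cluster_role}
  apiGroup: rbac.authorization.k8s.io
EOF
}

# Usage (placeholder names):
#   render_service_account_yaml YourServiceAccount default your-custom-role \
#     | kubectl apply -f -
```

The service account name passed here is the value to use for spark.kubernetes.authenticate.driver.serviceAccountName.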

Step 4. Add Cluster to Ilum

Here you can see a demo on how to add a Kubernetes cluster to Ilum.

Go to the cluster creation page

- Go to the Clusters section in the Workload

- Click the New Cluster button

Specify general settings:

- Choose a name and a description that you want.

- Set cluster type to Kubernetes

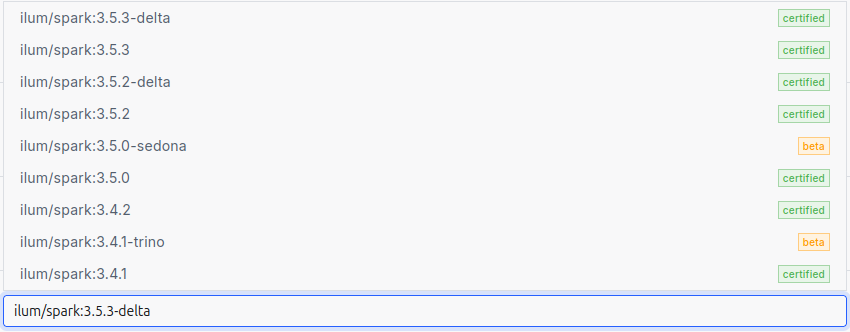

- Choose a Spark version

The Spark version is specified by selecting an image for the Spark jobs.

Ilum requires the use of its own images, which include all the necessary dependencies preinstalled.

Here is a list of all available images:

Specify spark configurations

The Spark configurations that you specify at the cluster level will be applied to every individual Ilum job deployed on that cluster.

This can be useful if you want to avoid repeating configurations. For example, if you are using Iceberg as your Spark catalog, you can configure it once at the cluster level, and then all Ilum jobs deployed on this cluster will automatically use these configurations.

Add storages

The default cluster storage will be used by Ilum to store all the files required to run Ilum Jobs, both those provided by the user and those provided by Ilum itself.

You can choose any type of storage: S3, GCS, WASBS, HDFS. In the example above, we add an S3 bucket.

- Click on the "Add storage" button

- Specify the name and choose the S3 storage type

- Choose Spark Bucket - the main bucket used to store Ilum Jobs files

- Choose Data Bucket - required in case you use the Ilum Tables Spark format

- Specify S3 endpoint and credentials

- Click on the "Submit" button

You can add any number of storages, and Ilum Jobs will be configured to use each one of them.

Go to the Kubernetes section and specify Kubernetes configurations

Here, we provide Ilum with all the required details to connect to our Kubernetes cluster. The process is similar to connecting to the cluster through the kubectl tool, but here it is done through the UI.

Following the example above, you will need to provide the following:

- Authority certificate (ca.crt)

- Client certificate (client.crt)

- Client key (client.key)

- Server URL

- Client username (e.g., myuser in the example above)

Once you have specified these five items, Ilum will be able to access your Kubernetes cluster.
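Before entering these values in the UI, it can be useful to confirm that they work together outside of kubectl. The following sketch calls the Kubernetes API directly with curl, using only the server URL and the three files. check_cluster_access is a hypothetical helper name, and the pods endpoint of the default namespace is chosen only as an example of a request that requires authentication.

```shell
# Call the Kubernetes API with the client certificate, key and CA file,
# i.e. the same credentials that are entered in the Ilum UI.
# Arguments: server URL and the directory holding ca.crt, client.crt, client.key.
check_cluster_access() {
  server_url=$1
  cert_dir=$2
  curl --silent --show-error --fail \
    --cacert "$cert_dir/ca.crt" \
    --cert "$cert_dir/client.crt" \
    --key "$cert_dir/client.key" \
    "$server_url/api/v1/namespaces/default/pods?limit=1"
}

# Usage:
#   check_cluster_access <server URL> /home/your-linux-user/remote-cluster
```

A JSON pod list in the response suggests that the certificate, key, CA and role binding are consistent. With --fail, curl returns a non-zero exit code if the API server rejects the request.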

In addition, you can:

- Configure Kubernetes to require a password for your user. In this case, you will need to specify the password here in the UI.

- Add a passphrase when creating the client key. If you did so, you will need to specify the passphrase here in the UI.

- Specify the key algorithm used in the client.key. This is not obligatory, as this information is usually stored within the key itself. However, there may be cases where you need to explicitly define it.

Finally, you can click on the Submit button to add a cluster.

Step 5. Networking

Unfortunately, giving Ilum access to run jobs on your cluster is not enough yet. Ilum Jobs have to communicate with the Ilum Core server via gRPC. Cron Jobs created by Ilum Schedules must access the Ilum Core server to launch Ilum Jobs at a specified time. To gather metrics, Ilum Jobs have to send their data to the event log, which is located on the main storage of Ilum.

Therefore, not only should you give the master cluster access to additional clusters, you should also give the remote clusters access to some services in the master cluster. Here we will cover one possible way to do that.

Note: In this guide we are not going to take a deep dive into networking and security. We provide instructions on how to get jobs running on your remote cluster in the simplest way possible, so that you can grasp the concept.

Configure Firewall

First of all, you need to allow incoming traffic into your GKE master-cluster. In a Google Cloud project, traffic access is managed by the project's firewall, which is configured through rules. To allow remote-cluster to access master-cluster services, we need to add this rule to the project of our master-cluster:

gcloud compute firewall-rules create allow-ingress-traffic \
  --network default \
  --direction INGRESS \
  --action ALLOW \
  --rules tcp:80,tcp:443 \
  --source-ranges 0.0.0.0/0 \
  --description "Allow HTTP/HTTPS"

This firewall rule allows incoming HTTP/HTTPS traffic (TCP ports 80 and 443) from any source address into the network of your master-cluster.

Exposing services from master cluster

You need to expose services in the master cluster to the outside world. To achieve this, change their type to LoadBalancer. Ilum makes this process straightforward using Helm configurations.

For example, to expose Ilum Core to the outside world, you can simply run the following command in your terminal:

helm upgrade ilum -n ilum ilum/ilum --version 6.2.0 --set ilum-core.service.type="LoadBalancer" --reuse-values

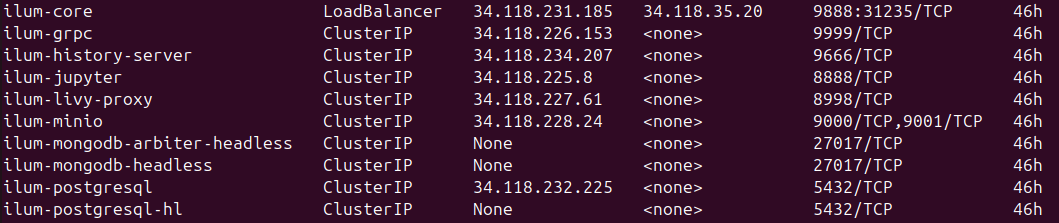

After that you should wait a few minutes and then check your services by running

kubectl get services

to see this:

Here you can notice the ilum-core service changed its type to LoadBalancer and got public ip.

Now you can go to http://<public-ip>:9888/api/v1/group to check if everything is okay.
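Instead of re-running kubectl get services until the address shows up, the wait can be scripted. This is a rough sketch under a few assumptions: the load balancer reports an IP address (as on GKE; some providers report a hostname instead), wait_for_external_ip is a made-up helper name, and POLL_INTERVAL is a hypothetical environment variable for the delay between attempts.

```shell
# Poll a Service until its LoadBalancer has an external IP, then print it.
# Arguments: service name, namespace, optional number of attempts (default 30).
wait_for_external_ip() {
  service=$1
  namespace=$2
  attempts=${3:-30}
  while [ "$attempts" -gt 0 ]; do
    ip=$(kubectl get service "$service" -n "$namespace" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    attempts=$((attempts - 1))
    sleep "${POLL_INTERVAL:-10}"   # seconds between attempts
  done
  echo "no external IP assigned to $service" >&2
  return 1
}

# Usage, with the master cluster selected as the current context:
#   curl "http://$(wait_for_external_ip ilum-core ilum):9888/api/v1/group"
```

The function prints only the address, so its output can be reused directly when building the URL or the external service definitions.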

Note: Almost every Ilum service in Helm can be configured using ilum-component.service.property="value". To learn more about configuring services, visit the Ilum page on Artifact Hub with our Helm charts.

Create External Services in remote cluster

Finally, you should bind the public IP of the service to a local domain name in the remote-cluster.

For example, here we want to be able to access ilum-core from the remote cluster at ilum-core:9888.

To do this, we create an External Service.

Create a yaml file ilum-core-external-service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: ilum-core
spec:
  type: ExternalName
  externalName: <public-ip>
  ports:
    - port: 9888
      targetPort: 9888

Remember to replace <public-ip> with the public IP of your service.

After that, you can create this service in the remote cluster by running

kubectl apply -f ilum-core-external-service.yaml

Basic networking configuration

To make use of Ilum Jobs and schedules, you should expose ilum-core, ilum-grpc and ilum-minio (in case you are using it as the default storage).

ilum-grpc is used constantly by Ilum Jobs to report state changes.

ilum-core is used by Cron Jobs to launch Ilum Jobs at a specified point in time.

ilum-minio stores the event log used by the Ilum History Server to monitor Spark jobs.

- Expose the services as load balancers in the master cluster:

helm upgrade ilum -n ilum ilum/ilum --version 6.2.0 \
  --set ilum-core.service.type="LoadBalancer" \
  --set minio.service.type="LoadBalancer" \
  --set global.grpc.service.type="LoadBalancer" \
  --reuse-values

- Create External Services in remote cluster

Create file external_services.yaml:

apiVersion: v1
kind: Service
metadata:
  name: ilum-grpc
spec:
  type: ExternalName
  externalName: <ilum-grpc-public-ip>
  ports:
    - port: 9999
      targetPort: 9999
---
apiVersion: v1
kind: Service
metadata:
  name: ilum-core
spec:
  type: ExternalName
  externalName: <ilum-core-public-ip>
  ports:
    - port: 9888
      targetPort: 9888
---
apiVersion: v1
kind: Service
metadata:
  name: ilum-minio
spec:
  type: ExternalName
  externalName: <ilum-minio-public-ip>
  ports:
    - port: 9000
      targetPort: 9000
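Because the three definitions differ only in name, address and port, they can be generated from the public addresses rather than edited by hand. The sketch below is one way to do that. Both function names are invented for this example, and the service names and ports are the ones used in this guide.

```shell
# Print an ExternalName Service manifest.
# Arguments: service name, external address, port.
render_external_service() {
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $1
spec:
  type: ExternalName
  externalName: $2
  ports:
    - port: $3
      targetPort: $3
EOF
}

# Print the three services used in this guide as one multi-document YAML.
# Arguments: public addresses of ilum-grpc, ilum-core and ilum-minio.
render_external_services() {
  render_external_service ilum-grpc "$1" 9999
  printf '%s\n' '---'
  render_external_service ilum-core "$2" 9888
  printf '%s\n' '---'
  render_external_service ilum-minio "$3" 9000
}

# Usage, with the remote cluster selected as the current context:
#   render_external_services <ilum-grpc-public-ip> <ilum-core-public-ip> \
#     <ilum-minio-public-ip> | kubectl apply -f -
```

The Kubernetes documentation notes that an externalName that looks like an IPv4 address is treated as a DNS name rather than as an IP address, so if the names do not resolve in your cluster, passing DNS hostnames for the services instead may be worth trying.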

Components in multi-cluster architecture

If you are using a component like Lineage in your master cluster, you will not be able to launch Ilum Jobs in a remote cluster unless you expose the component to the remote cluster. You can do this following the example mentioned earlier.

Components usable in multi-cluster architecture with additional networking configuration:

- Hive Metastore

- Marquez

- History Server

- Graphite

Services restricted to single-cluster architecture:

- Kube Prometheus Stack: Prometheus needs direct access to every Ilum Job pod to gather metrics data. This is challenging to implement in a multi-cluster architecture with dynamically appearing pods.

- Loki and Promtail: Promtail collects logs similarly to Prometheus and faces the same limitations in multi-cluster environments.

Note: All other services not listed above are independent of the number of clusters used and can function in either single- or multi-cluster setups.